Vandalism

Vandalism is intentionally ignoring the consensus norms of the OpenStreetMap community about editing data. Simple mistakes and editing errors are not vandalism but may need to be reverted using some of the same tools that are used for vandalism.

See abuse for discussion of some other types of violation of consensus norms of the community.

Vandalism and other types of bad editing

Vandalism in the purest sense would be acts of "graffiti" on the map, carried out with the motivation of childish fun or as a purposeful attempt to damage OpenStreetMap. It's relatively rare to see occurrences of pure vandalism. OpenStreetMap is a not-for-profit good cause, and the map data is "owned by" the community. On the whole people tend to have respect for that.[1]

There are various other types of bad editing which are very similar to vandalism, carried out with various motivations beyond childish fun, but resulting in the need for the same types of remedies. These include:

- Copyright infringement

- Disputes within the OpenStreetMap database (edit wars)

- Disputes on the wiki

- Inappropriate use of Bots

- Persistent disruptive behaviour

- Spamming

- Purposeful removal or degradation of data that are known to be correct

- Deliberately adding incorrect data

- Undiscussed imports

- Insulting or contentless notes

- Repeatedly opening notes that were validly closed

Simple mistakes and editing errors are not vandalism, although some may need to be reverted.

Vandalism response

Info for beginners

If you see a mistake or some damage to the map (malicious or not), you may be unsure what should be done and how. In that case, as usual, contact other mappers and ask for help. The information on this page may also help.

General guidelines

The view from a discussion on talk in September 2009 was:

- We should expect that all contributors should at all times attempt to make good, accurate and well researched changes

- We need to ensure that every contributor is, on balance, making the dataset better, not worse. If the contribution is in doubt we owe it to other contributors to investigate and respond.

- We should be aware that people make mistakes, need time to learn and newbies often need and will respond to support

- We can request, but not require, contributors to add comments to their changesets and to create a useful personal page with some details about their interests and knowledge. Doing this makes reversion less likely and makes it more likely that the person will be helped if needed.

- In the event that someone seems to be doing strange edits one should initially assume "good faith" but should watch carefully and discuss with others if appropriate.

- If a significant number of edits to ways can be definitively proved to be malicious, obscene, libelous or it is considered that they might bring the project into disrepute then the related change-sets can be reverted immediately without discussion and without 100% checking of the rest of the change-set.

- If the edits are dubious but cannot be proved incorrect, then we should contact the person and ask for additional information. If we do not get a reasonable response (or get no response), the dubious edits continue, and there is not a good number of balancing, clearly positive contributions, then we should look to prove at least one bad edit. We may then decide, in discussion with others, that it is appropriate to revert the changeset in question and potentially all changesets by that person.

- Once someone has been identified as a problematic contributor, one only needs to perform a brief inspection of subsequent edits before reverting future changesets.

- If the problem continues then we put them on "virtual ban", where their edits get reverted with no inspection of the merit of the changes unless the person contacts a sys-admin and says they have grown up and want another chance.

- If someone performs bad edits in any part of the world then they can expect a global response, because it seems very unlikely that someone would mess with Ireland and do good work in Iceland, and I am not sure I would want to work out what was going on in their head. I would prefer to protect the good work of others from mischief than allow good work to be messed up on the off-chance that some good edits are also made in amongst the nonsense.

- People who revert other people's work should expect to be able to demonstrate that the reversion was well reasoned and proportionate to the issue.

- Sometimes "null" edits (ones which have no purpose other than to "touch" the element) get made by mistake or by the editor, or are useful for updating an area. However, large numbers of "null" edits will be considered disruptive behaviour.

Normal revert

If the extent of the vandalism is local, the impact on the overall community is limited, and it is likely that the edit was not malicious, then make polite, direct human contact via OSM Messaging or changeset discussions, assuming good intentions, and wait 24-48 hours for a response. If an adequate response is not received then it may be appropriate to discuss the issue on an appropriate email list (normally the local, national or regional list) or with trusted individuals. If some edits can be proven to be counterproductive and helpful edits are not obvious then the changesets in question should be reverted.

Speedy revert

If a significant number of edits to ways can be definitively proved to be malicious, obscene, libelous or it is considered that they might bring the project into disrepute then it is important to respond immediately and revert first and ask questions to the contributor in parallel. It may also be appropriate to contact the Data Working Group at the same time. Objects that have been touched since the initially bad edit may require using a complex reversion method, so if you are unfamiliar with these tools then seek assistance from the community or Data Working Group.

Temporary block

If, after contact has been made with the mapper via changeset discussions and/or messages, the mapper refuses to respond and does not alter their behavior, the Data Working Group may place a temporary block on the user in question. This is neither a presumption of guilt nor a banishment from the project. Rather, it is a measure to get the attention of the mapper as they must log in to the OSM site and read the block message before they can continue uploading edits through the API.

Temporary blocks vary in length; a typical block lasts 0 to 96 hours. A block can also require the user to log in and read the block message before it is lifted, regardless of its expiration time.

DWG members will often place a 0-hour block with the message-read condition on an account as an initial measure against unresponsive mappers involved in a conflict.

A history of blocks is available here.

Long-term block and permanent ban

See the OSM Foundation ban policy for more information.

Governance

Where possible the local OpenStreetMap community should resolve vandalism through the above processes.

Data Working Group

- Main article: Data working group

The Data Working Group is authorised by the Foundation to deal with more serious vandalism and reports to the board at the monthly board meetings. If blocking an account is necessary, the issue should be reported to the Data Working Group (data@osmfoundation.org).

The Data Working Group should also be contacted if the problem requires hiding parts of the history of edited objects.

Development

There are a number of possible tools and techniques that could be used to manage vandalism issues. Possible tools include:

- White lists

- Data rollback — Let the community run this task using tools that have been tested

- Data deletion — Let the community run this task using tools that have been tested

- Change rollback (revert)

- History removal — Requires high level privileges

- Rollbacks

- Data with later history

Detection

- Main article: Detect Vandalism

There are tools available to revert edits for particular change-sets as long as further edits have not subsequently been made to any of the relevant features. These tools are currently hard to use, and require good technical knowledge to operate without causing further damage.

Detecting vandalism within OSM is tricky, as the types of vandalism above range widely in type, extent, and effect. OSMCha is one of the tools that can be used for that.

There are multiple approaches to automated vandalism detection that generally fall within two categories: changeset focused and user focused. But, as in the case of any detection system, either focus will often have false positives or miss "smart" vandals.

Changeset focused

Changeset focused detection relies on scanning changesets for nefarious behavior by examining the metadata of the changeset and perhaps the actual objects or nature of tags used. One common method employs limits on the number of objects edited, added, or deleted. An example program in pseudocode:

    for changeset in diff file:
        if more than 1000 objects are added:
            flag as a potential import
        if more than 1000 objects are modified:
            flag as a potential mechanical edit
        if more than 1000 objects are deleted:
            flag as a potential vandal

This type of scanning relies on the idea that users generally do not edit a large number of objects in everyday mapping. But, in the above example, all events are flagged "potential" as there are often very reasonable explanations for performing so many actions (e.g. authorized imports, reversions of bad edits, etc.). It also would not detect small scale vandalism or vandalism split into multiple changesets.
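The pseudocode above could be turned into a runnable form roughly as follows. This is only a minimal sketch: the `ChangesetCounts` structure, the function names, and the way counts are obtained are assumptions for illustration, and parsing a real diff file is left out. The 1000-object limit is taken from the pseudocode and would need tuning in practice.

```python
from dataclasses import dataclass

# Limit taken from the pseudocode above; an illustrative value, not a standard.
OBJECT_LIMIT = 1000


@dataclass
class ChangesetCounts:
    """Per-changeset object counts (a hypothetical, simplified structure)."""
    changeset_id: int
    added: int = 0
    modified: int = 0
    deleted: int = 0


def flag_changeset(counts, limit=OBJECT_LIMIT):
    """Return the 'potential' flags raised by a single changeset."""
    flags = []
    if counts.added > limit:
        flags.append("potential import")
    if counts.modified > limit:
        flags.append("potential mechanical edit")
    if counts.deleted > limit:
        flags.append("potential vandal")
    return flags


def flag_all(changesets, limit=OBJECT_LIMIT):
    """Map changeset id to its flags, skipping changesets with no flags."""
    result = {}
    for counts in changesets:
        flags = flag_changeset(counts, limit)
        if flags:
            result[counts.changeset_id] = flags
    return result
```

Every flag stays "potential": the output is meant as a list of changesets for a human to review, not as a trigger for automatic reverts.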

In the changeset approach, tags can also reveal potentially bad behavior. Listing copyrighted data as a source is usually a red flag, though some users unfamiliar with the source of default imagery like Bing may list a generic trademark (e.g. Google, Garmin) creating a false positive. Using very outdated or uncommon editors, adding very long tag values, including many extraneous changeset tags, and other behaviors are other examples of leveraging tags in detecting bad edits.
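A tag-based check along these lines might look like the sketch below. The word list, the length limit, and the tag-count limit are hypothetical values chosen for illustration, and the function name is not from any existing tool. Changeset tags are assumed to be available as a plain dictionary of strings.

```python
# Illustrative values only; none of these come from an official list or policy.
SUSPECT_SOURCE_WORDS = ("google", "garmin")
LONG_VALUE_LENGTH = 150
MAX_TAG_COUNT = 20


def check_changeset_tags(tags,
                         suspect_words=SUSPECT_SOURCE_WORDS,
                         max_len=LONG_VALUE_LENGTH,
                         max_tags=MAX_TAG_COUNT):
    """Return a list of warnings for one changeset's tags (dict of str -> str)."""
    warnings = []

    # A source naming a copyrighted provider is a red flag, but may be a
    # false positive from a user who mislabelled the default imagery.
    source = tags.get("source", "").lower()
    for word in suspect_words:
        if word in source:
            warnings.append(f"source mentions '{word}'")

    # Unusually long values.
    for key, value in tags.items():
        if len(value) > max_len:
            warnings.append(f"very long value for '{key}'")

    # Many extraneous changeset tags.
    if len(tags) > max_tags:
        warnings.append("many changeset tags")

    return warnings
```

For example, `check_changeset_tags({"source": "Google Maps", "comment": "added shops"})` reports the source, while a changeset with `source=Bing` passes without warnings.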

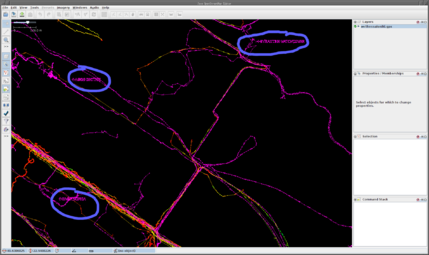

Another component of the changeset approach is examining the spatial characteristics of the objects in a changeset. Added or edited objects could be compared to samples of smiley faces, drawn out words, anatomical features, and doodles to test how similar they are to this list of graffiti.
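One simple way to compare a drawn shape against sample shapes is a Hausdorff-style distance between normalized point sets. The sketch below is an assumption about how such a comparison could be done, not a description of any existing detector. It treats coordinates as planar, compares vertices only, is not rotation-invariant, and the 0.2 threshold is an arbitrary illustrative value.

```python
import math


def normalize(points):
    """Move points to their centroid and scale so the farthest one is at distance 1."""
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    centered = [(x - cx, y - cy) for x, y in points]
    scale = max(math.hypot(x, y) for x, y in centered) or 1.0
    return [(x / scale, y / scale) for x, y in centered]


def directed_distance(a, b):
    """Largest distance from any point in a to its nearest point in b."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def shape_distance(a, b):
    """Symmetric Hausdorff distance between two point lists after normalization."""
    na, nb = normalize(a), normalize(b)
    return max(directed_distance(na, nb), directed_distance(nb, na))


def looks_like_graffiti(way, samples, threshold=0.2):
    """True if the way is close to any sample shape (hypothetical threshold)."""
    return any(shape_distance(way, sample) <= threshold for sample in samples)
```

Because of the normalization, a shape matches a sample regardless of where it is drawn or how large it is; a production system would likely need a more robust similarity measure.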

User focused

User focused detection looks at the editing character of the individual. Did a user make 300 edits within 10 minutes of joining? Are all edits of a new user deletions? This detection aims to catch the behavior of the individual instead of the characteristics of the objects.
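The two questions above can be expressed as a small check over a user's edit history. This is a sketch under stated assumptions: the `Edit` record and the `user_flags` function are hypothetical, and the 300-edit and 10-minute values are simply the figures from the example questions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Edit:
    """One edit by a user (a hypothetical, simplified record)."""
    timestamp: datetime
    action: str  # "create", "modify" or "delete"


def user_flags(joined, edits, burst_count=300,
               burst_window=timedelta(minutes=10)):
    """Return behavioural flags for one user, given join time and edits."""
    flags = []

    # Did the user make many edits right after joining?
    early = [e for e in edits
             if joined <= e.timestamp <= joined + burst_window]
    if len(early) >= burst_count:
        flags.append("burst of edits right after joining")

    # Are all of the user's edits deletions?
    if edits and all(e.action == "delete" for e in edits):
        flags.append("only deletions")

    return flags
```

As with the changeset approach, a flag here only marks an account for closer inspection; a new user cleaning up a genuinely duplicated import, for instance, would also trigger the deletion check.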

Legal situation

Many countries have laws against cyber crimes that cover "modifying data in a computer system and thereby causing damage" or similar. There's no known case of someone being taken to court or even convicted for vandalising OSM but in theory these laws could apply even to those who persistently add "graffiti" to OSM, particularly ones evading permanent or long-term blocks.

See also

- Abuse

- DMCA Designated Agent

- Quality assurance

- Spam

- Report user

- What's the problem with mechanical edits?

External links

- Wikipedia vandalism on Wikipedia

References

- [1] OpenGeofiction is a cartography site for creating virtual maps in a style similar to OSM.