OSM Conflator

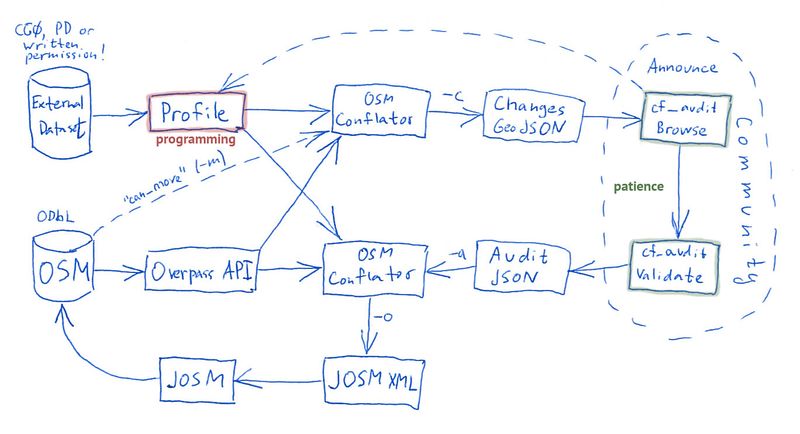

OSM Conflator is a Python script to merge a third-party dataset with coordinates into OpenStreetMap data. It produces a JOSM XML or osmChange file ready to be validated and uploaded, and a preview file for validating the changes. The script was inspired by Osmsync, and, to repeat the warning from its page, as with any other automated edit it is essential that people read and adhere to the Automated Edits code of conduct.

The script was made by MAPS.ME and was developed on GitHub at https://github.com/mapsme/osm_conflate until 2019. It has not been updated since then, including by the Organic Maps team, but a copy also exists at https://github.com/organicmaps/osm_conflate

How It Works

First, it asks a profile (see below) for a dataset. In the simplest case (which does not even require you to write any code), a dataset is a JSON array of objects with id, lat, lon and tags fields. "Tags" is an object with OpenStreetMap tags: amenity, name, opening_hours, ref and so on. Some of these are marked authoritative: when merging, their values replace the values from OSM. For example, we may be sure that parking zones are correctly numbered in the source dataset, but allow mappers to correct opening hours.
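The merge rule for authoritative tags can be sketched in Python, the language of the script and its profiles. This is a minimal illustration, not the conflator's own code: merge_tags is a hypothetical name, and the sketch assumes that non-authoritative dataset tags only fill in keys missing from OSM, while conflicting dataset values are reported as unused (similar in spirit to the ref_unused_tags property of the preview file).

```python
def merge_tags(osm_tags, dataset_tags, master_tags):
    """Return (merged, unused) tags for one matched OSM object.

    Hypothetical helper: authoritative ("master") keys always take the
    dataset value; other dataset tags only fill keys missing in OSM.
    """
    merged = dict(osm_tags)
    unused = {}
    for key, value in dataset_tags.items():
        if key in master_tags or key not in merged:
            merged[key] = value
        elif merged[key] != value:
            # The OSM value wins; remember the dataset value that was dropped
            unused[key] = value
    return merged, unused


osm = {'amenity': 'parking', 'ref': '12', 'opening_hours': 'Mo-Fr 08:00-20:00'}
src = {'amenity': 'parking', 'ref': '0012', 'opening_hours': '24/7', 'capacity': '40'}
merged, unused = merge_tags(osm, src, master_tags={'ref'})
print(merged)  # ref and capacity come from the dataset, opening_hours from OSM
print(unused)  # {'opening_hours': '24/7'}
```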

Then the conflator makes a query to the Overpass API. It uses a bounding box for all dataset points and a set of tags from which it builds the query. Alternatively, it can use an OSM XML file, filtered by the query tags and, if needed, further with a profile function.
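As an illustration, the query-building step might look like the sketch below. It follows the tuple rules listed under the query profile field and pads the box by the documented default bbox_padding of 0.003 degrees. The function names, the 120-second timeout and the exact shape of the Overpass QL request (a union of node, way and relation statements followed by "out meta center") are assumptions, not the script's actual output.

```python
def tag_filter(pair):
    """Turn one query tuple into an Overpass QL tag filter."""
    if len(pair) == 1:
        return '["{}"]'.format(pair[0])                # ("key",)
    key, value = pair
    if value is None:
        return '[!"{}"]'.format(key)                   # ("key", None)
    if value.startswith('~'):
        return '["{}"~"{}"]'.format(key, value[1:])    # ("key", "~regex")
    return '["{}"="{}"]'.format(key, value)            # ("key", "value")


def padded_bbox(points, padding=0.003):
    """(minlat, minlon, maxlat, maxlon) around (lat, lon) pairs, padded in degrees."""
    lats = [lat for lat, _ in points]
    lons = [lon for _, lon in points]
    return (min(lats) - padding, min(lons) - padding,
            max(lats) + padding, max(lons) + padding)


def build_query(query, bbox, timeout=120):
    """Assemble one Overpass QL request for nodes, ways and relations in bbox."""
    filters = ''.join(tag_filter(pair) for pair in query)
    box = ','.join('{:.6f}'.format(c) for c in bbox)
    body = ''.join('{}{}({});'.format(kind, filters, box)
                   for kind in ('node', 'way', 'relation'))
    return '[out:xml][timeout:{}];({});out meta center;'.format(timeout, body)
```

For example, build_query([('amenity', 'parking'), ('access', None)], padded_bbox(points)) applies the filters ["amenity"="parking"][!"access"] to each element type inside the padded box. Overpass expects the box as south, west, north, east, which matches the [minlat, minlon, maxlat, maxlon] order of the bbox field.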

Matching consists of several steps:

- If the dataset has a dataset_id, the script searches for OSM objects with a ref:dataset_id tag (e.g. ref:mos_parking=*) and updates their tags based on dataset points with matching identifiers. Objects with obsolete identifiers are deleted.

- Then it finds the closest matching OSM objects for each dataset point. The maximum distance is specified in the profile and can vary depending on the dataset quality. Tags on these objects are also updated, minding the authoritative keys list.

- Unmatched dataset points are added as new nodes with a full set of tags from the profile.

- Remaining OSM objects are either deleted, or a few tags are added to them, like fixme=This object might have been dismantled, please check.

Finally, the conflator produces an osmChange file out of the list it prepared, and writes it to the console or to an output file.
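The geometric matching step can be approximated by a brute-force greedy pass: compute the distances between all dataset points and OSM objects, then pair the closest free ones within max_distance. This is a simplified sketch, not the script's algorithm: it skips the ref:dataset_id step, the nearest_points limit and the matches and weight hooks, and it compares every pair instead of using a k-d tree. Both inputs are assumed to be lists of dicts with id, lat and lon keys.

```python
import math


def distance_m(lat1, lon1, lat2, lon2):
    """Great-circle (haversine) distance in meters."""
    r = 6371000.0
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def match_points(dataset, osm, max_distance=100):
    """Greedily pair dataset points with the closest free OSM objects.

    Returns (matches, new_points, leftover_osm): matches maps dataset ids
    to OSM ids, new_points would become new nodes, leftover_osm would be
    deleted or retagged.
    """
    candidates = []
    for d in dataset:
        for o in osm:
            dist = distance_m(d['lat'], d['lon'], o['lat'], o['lon'])
            if dist <= max_distance:
                candidates.append((dist, d['id'], o['id']))
    candidates.sort(key=lambda c: c[0])  # closest pairs first
    used_d, used_o, matches = set(), set(), {}
    for dist, did, oid in candidates:
        if did not in used_d and oid not in used_o:
            matches[did] = oid
            used_d.add(did)
            used_o.add(oid)
    new_points = [d for d in dataset if d['id'] not in used_d]
    leftover = [o for o in osm if o['id'] not in used_o]
    return matches, new_points, leftover
```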

Usage

You will need Python 3. Install the command-line tool and its dependencies (kdtree and requests) with:

pip install osm_conflate

(Note for macOS: You can install Python via brew install python and then use pip3 install osm_conflate.)

Then run conflate -h: it will give you an overview of the options. It is recommended to run the conflation with these options:

conflate <profile.py> -o result.osm -c preview.json

After testing the importing process, you should follow the import guidelines. At the very least, you should post to your regional mailing list or regional forum, and/or to the imports@ mailing list:

- What you are planning to import.

- Why the license for the dataset is compatible with the OSM Contributor Terms (CC0 and PD sources are okay; CC-BY and more restrictive licenses require permission from the owner).

- How many relevant objects there are in OSM now, how many will be created and how many will be updated (the conflator prints these numbers).

- Link to the profile you are using, if relevant.

- Link to a preview map. Go to geojson.io, open your preview JSON file and press "Share".

- A date for the final import, if there are no major objections. Give it a week or two, depending on the size of the import.

To store the downloaded OSM dataset, so that you are not blocked by the Overpass API when testing a profile, use the --osm option. Do not forget to remove it when preparing the final file for upload.

Previewing changes

Use the --changes option to write a GeoJSON file with changes. It is meant to be opened in geojson.io or similar services that use the marker-color property. You will see a lot of colored markers:

- Light green: no match was found in OSM, so a new object was created.

- Light blue: an OSM object was found, and its tags were modified.

- Light red: an OSM object had a "ref:dataset" tag, but was missing from the new dataset and was deleted.

- Dark red: an OSM object could not be deleted and was retagged instead.

- Dark blue: an OSM object had a "ref:dataset" tag and was moved, because the point in the dataset had moved too far away.

The properties of these objects contain all the information needed to decide on correctness. Besides the original and modified tags, there is a ref_distance key with the distance in meters from the dataset point, ref_coords with the original coordinates, and ref_unused_tags with dataset tags that did not make it into the new object because the OSM tags took precedence.
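When the preview has many markers, a short script can help review it. The sketch below assumes the file is a standard GeoJSON FeatureCollection whose feature properties carry marker-color and, for matched objects, a numeric ref_distance; summarize_preview and the 50-meter threshold are illustrative choices, not part of the conflator.

```python
from collections import Counter


def summarize_preview(geojson, far_threshold=50.0):
    """Count preview features by marker-color and list distant matches.

    Returns (colors, far): a Counter of marker colors, and a list of
    (ref_distance, properties) pairs above far_threshold, farthest first.
    """
    colors = Counter()
    far = []
    for feature in geojson.get('features', []):
        props = feature.get('properties', {})
        colors[props.get('marker-color', 'none')] += 1
        dist = props.get('ref_distance')  # absent for newly created objects
        if dist is not None and dist > far_threshold:
            far.append((dist, props))
    far.sort(key=lambda item: item[0], reverse=True)
    return colors, far
```

Load preview.json with json.load, pass the result to summarize_preview, and print colors.most_common() to see how many objects fall into each category; the far list points at matches worth checking by hand, together with their ref_unused_tags.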

Uploading

The simple way is to load the resulting XML file into the JOSM editor. It is also the preferred method, since you will have changeset tags pre-filled. You will also need to create a separate account for the import and configure it in the Preferences dialog (F12, then the "World" tab). Finally, press "Upload" (green arrow up) in the editor, and type a comment describing the data.

Alternatively, you can use the command-line tool bulk_upload.py. Please do not skip its changeset comment option.

Profiles

A profile is a Python file (much like a regular configuration file, if you don't use functions). Alternatively, you can use a JSON object. There are example profiles, some of which were used for actual imports, in the profiles directory. Do study these, starting with moscow_parkomats.py.
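To give a feel for the format, here is a hypothetical minimal profile for a JSON feed of fuel stations. The field names (source, dataset_id, download_url, query, master_tags, max_distance, dataset) are the ones listed below, but the URL and the feed's structure (station_id, latitude, longitude, brand and hours keys) are invented for illustration. Since it is not stated whether the file object yields bytes or text, the sketch accepts both.

```python
# Hypothetical minimal profile; field names follow the profile field list.
import json

source = 'Example Open Data'                    # value for source=*
dataset_id = 'example_fuel'                     # matched objects get ref:example_fuel
download_url = 'https://example.com/fuel.json'  # placeholder URL
query = [('amenity', 'fuel')]
master_tags = ['brand', 'opening_hours']        # dataset values always win here
max_distance = 50                               # meters


def dataset(fileobj):
    """Convert the (assumed) JSON feed into dicts with id, lat, lon and tags."""
    raw = fileobj.read()
    if isinstance(raw, bytes):
        raw = raw.decode('utf-8')
    points = []
    for row in json.loads(raw):
        tags = {
            'amenity': 'fuel',
            'brand': row.get('brand'),
            'opening_hours': row.get('hours'),
        }
        points.append({
            'id': row['station_id'],
            'lat': float(row['latitude']),
            'lon': float(row['longitude']),
            # Drop empty values so they do not become empty tags
            'tags': {k: v for k, v in tags.items() if v},
        })
    return points
```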

The conflator asks a profile for the following fields, any of which can be python functions:

- dataset

- A function that should return a list of dicts with 'id', 'lat', 'lon' and 'tags' keys. It receives a file object as its first parameter: either an actual file or a wrapper around the downloaded data.

- download_url

- When there is no source file, the data is downloaded from this URL and passed to the dataset function.

- transform

- A dict or a function to transform tags in the dataset. The function receives a dict of tags to modify; for the dict format, see "Transforming tags" below.

- duplicate_distance

- Dataset points that are closer than this distance (in meters) will be considered duplicates of each other.

- query

- A list of key-value pairs for building an Overpass API query. These tuples are processed like this:

- ("key",) → ["key"]

- ("key", None) → [!"key"]

- ("key", "value") → ["key"="value"]

- ("key", "~value") → ["key"~"value"]

- qualifies

- A function that receives a dict of tags and returns True if the OSM object with these tags should be matched to the dataset. All objects received from the Overpass API or an OSM XML file are passed through this function.

- bbox

- A bounding box for the Overpass API query. If True (the default), it is produced from all the source points. If False, it is the entire world. Specify a list of four numbers to use a custom bounding box: [minlat, minlon, maxlat, maxlon].

- max_request_boxes

- When preparing an Overpass API query, the conflator calculates not one bounding box covering all the dataset points, but several smaller boxes. This avoids querying the whole world for objects: instead, it makes a few queries for separate groups, e.g. for different countries. This parameter sets the maximum number of bounding boxes to construct. The default is four.

- bbox_padding

- Pad calculated bounding boxes by this value, in degrees. Default is 0.003.

- overpass_timeout

- Timeout for Overpass API queries, in seconds. Set to None to use the default Overpass API value.

- dataset_id

- An identifier to construct the ref:whatever tag, which would hold the unique identifier for every matched object.

- no_dataset_id

- A boolean value, False by default. If True, the dataset_id is not required: the script won't store identifiers in objects, relying on geometric matching every time.

- find_ref

- A function to extract a dataset identifier from OSM tags. Useful when you have no_dataset_id = True but identifiers are encoded in, e.g., website values, and you want to match on them.

- bounded_update

- A boolean value, False by default. If True, objects with ref:whatever tag are downloaded only for the import region, not for the whole world.

- regions

- Set to anything but None to geocode dataset points. 'all' geocodes down to regions, 'countries' gives country codes only. See "Geocoding" below for a full description.

- max_distance

- Maximum distance in meters for matching objects from a dataset and OSM. Default is 100 meters.

- weight

- A function to calculate the weight of an OSM object. Its only parameter is the object, with fields such as tags and osm_type. It should return a number that alters the calculated distance between this object and dataset points. Positive numbers greater than 3 decrease the distance (so a weight of 50 for a point 90 meters away from a dataset point makes it closer than an OSM point 45 meters away), and negative numbers increase it. Numbers between -3 and 3 are multiplied by max_distance.

- override

- A dictionary mapping dataset identifiers to strings, where each string is the id of an OSM object (in the form "n12345" or "w234") or the name of a nearby POI. These objects must exist in the downloaded OSM data.

- matches

- A function that gets OSM object tags and dataset point tags, and returns False if these points should not be matched.

- nearest_points

- The number of nearest points to iterate over when looking for one that passes the matches function and the categories check. Default is 10, which is enough for most cases.

- master_tags

- A list of keys whose dataset values always replace the values on matched OSM objects.

- source

- Value of the source=* tag, if added.

- add_source

- If True, add or modify source tags on touched objects.

- delete_unmatched

- If set to True, unmatched OSM points will be deleted. Default is False: they are retagged instead.

- tag_unmatched

- A dict of tags to set or replace on unmatched OSM objects. Used when delete_unmatched is False or when an object is an area: areas cannot be deleted with this script.
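The weight rule is easiest to check with numbers. The function below encodes one reading of the weight description above, inferred from the prose and its 90 m versus 45 m example rather than from the conflator's source: weights outside [-3, 3] are treated as meters subtracted from the distance, and weights inside that range are first multiplied by max_distance. effective_distance is a hypothetical name.

```python
def effective_distance(distance, weight, max_distance=100):
    """Distance used for ranking a candidate after applying its weight.

    Inferred rule (an assumption): |weight| > 3 means meters to subtract;
    weights in [-3, 3] are first scaled by max_distance.
    """
    if -3 <= weight <= 3:
        weight *= max_distance
    return distance - weight


# A point 90 m away with weight 50 beats a point 45 m away with weight 0.
print(effective_distance(90, 50))  # 40
print(effective_distance(45, 0))   # 45
```

Under this reading, a negative weight such as -10 pushes an object 10 meters further away, and a weight of 0 leaves the distance unchanged.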

Inheriting profiles

When you have multiple datasets coming from a single source and want to process them all the same way, tweaking only a few tags or the query, you might need a "parent" profile to inherit from. Inheritance won't work with profile files, but you can reverse the chain and call OSM Conflator from your own script, supplying a profile in whatever form you like.

To do that, you need a runnable script that imports the conflate library and executes conflate.run(profile). A profile here can be a dict or a static class. Both options allow you to override properties: either by modifying dict values, or by overriding the fields and methods of a class.

Example:

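#!/usr/bin/env python3
import conflate
from random import randint


class Random:
    # Shared settings for the "parent" profile
    no_dataset_id = True
    download_url = 'http://example.com'

    def random_points(vmax, count):
        # No "self": the class itself is the profile, so this is called as Random.random_points()
        return [conflate.SourcePoint(i, randint(0, vmax), randint(0, vmax)) for i in range(count)]


class Profile(Random):
    # Inherits no_dataset_id and download_url, adds its own fields
    source = 'Random'
    query = [('amenity', 'fuel')]

    def dataset(f):
        # f is the source file object; it is unused in this example
        return Random.random_points(9, 1)


conflate.run(Profile)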

Note that we are calling run() with the class itself, not an instance of it. When running this script, you will notice that all the command-line arguments of conflate.py are still supported, except the "profile" argument, which now cannot be supplied.

See this profile for a real-world example.

Transforming tags

If you prefer to process dataset attributes without writing code, or need to fix small issues in an otherwise properly formatted JSON source, use the transform field. It is usually a dict with OSM keys as keys and rules as values. The rule for a key can be:

- Empty: does nothing. But if you add a processor, e.g. 'operator': '|lower', it alters the tag value; in this example, the value is made lower-case. There are no other processors at the moment.

- Function or lambda: calls the function with one parameter, the tag value (None if absent). Replaces the value with the result, or deletes the tag if the function returns None.

- Number or a non-prefixed string: replaces the value with a given one.

- "-": deletes the tag.

- ".key": takes the value from another tag.

- ">key": changes the key to another one. "<key" also works, in the reverse direction.

For example, this part of a profile converts postal_code=* to addr:postcode=*, removes name=* and formats phone=* for the UK:

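def format_phone(ph):
    # Only reformat 13-character UK numbers: "+44" followed by 10 digits
    if ph and len(ph) == 13 and ph[:3] == '+44':
        if (ph[3] == '1' and ph[4] != '1' and ph[5] != '1') or ph[3:7] == '7624':
            # 4-digit code: +44 1234 567890
            return ' '.join([ph[:3], ph[3:7], ph[7:]])
        elif ph[3] in ('1', '3', '8', '9'):
            # 3-digit code: +44 123 456 7890
            return ' '.join([ph[:3], ph[3:6], ph[6:9], ph[9:]])
        else:
            # 2-digit code: +44 20 7123 4567
            return ' '.join([ph[:3], ph[3:5], ph[5:9], ph[9:]])
    return ph


transform = {
    'postal_code': '>addr:postcode',  # rename the key
    'phone': format_phone,            # rewrite the value
    'name': '-'                       # delete the tag
}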
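The rules above can be sketched as a small interpreter over a tag dict. This is a hypothetical re-implementation meant to show what each rule does, not the conflator's code. Its assumptions: rules are processed in dict order, only the lower processor exists, numeric constants become strings (OSM tag values are strings), and a .key or <key rule does nothing when the source tag is missing.

```python
def apply_transform(tags, transform):
    """Apply transform rules to a copy of a tag dict (hypothetical sketch)."""
    result = dict(tags)
    for key, rule in transform.items():
        processor = None
        if isinstance(rule, str) and '|' in rule:
            rule, processor = rule.split('|', 1)  # e.g. '|lower'
        target = key  # the key holding the value after this rule
        if callable(rule):
            value = rule(result.get(key))  # None if the tag is absent
            if value is None:
                result.pop(key, None)
            else:
                result[key] = value
        elif rule == '-':
            result.pop(key, None)
        elif isinstance(rule, str) and rule[:1] == '.':
            if rule[1:] in result:  # copy the value from another tag
                result[key] = result[rule[1:]]
        elif isinstance(rule, str) and rule[:1] == '>':
            target = rule[1:]  # rename key -> target
            if key in result:
                result[target] = result.pop(key)
        elif isinstance(rule, str) and rule[:1] == '<':
            if rule[1:] in result:  # rename the other key -> key
                result[key] = result.pop(rule[1:])
        elif rule not in ('', None):
            result[key] = str(rule)  # constant: number or plain string
        if processor == 'lower' and target in result:
            result[target] = result[target].lower()
    return result
```

With the transform dict above, apply_transform(tags, transform) would move postal_code to addr:postcode, pass phone through format_phone and drop name, leaving every other tag as it was.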

Geocoding

The conflator has a built-in offline geocoder, which adds a "region" field to the produced GeoJSON changes file. Technically, it uses a k-d tree to find the nearest village or town, each of which has a country and a region. Around 1.1 million places from OpenStreetMap are encoded, which should be enough.
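The nearest-place lookup can be illustrated with a tiny 2-d tree. The sketch treats latitude and longitude as plain planar coordinates (squared degrees), which is a simplification: it ignores the shrinking of longitude degrees towards the poles and the wrap at the 180th meridian. The (lat, lon, payload) place tuples and the function names are hypothetical.

```python
def build_kdtree(places, depth=0):
    """Build a 2-d tree from (lat, lon, payload) tuples, alternating axes."""
    if not places:
        return None
    axis = depth % 2  # 0 splits on latitude, 1 on longitude
    places = sorted(places, key=lambda p: p[axis])
    mid = len(places) // 2
    return {
        'place': places[mid],
        'axis': axis,
        'left': build_kdtree(places[:mid], depth + 1),
        'right': build_kdtree(places[mid + 1:], depth + 1),
    }


def nearest(node, lat, lon, best=None):
    """Return (squared_distance, place) for the place closest to (lat, lon)."""
    if node is None:
        return best
    place = node['place']
    d2 = (place[0] - lat) ** 2 + (place[1] - lon) ** 2
    if best is None or d2 < best[0]:
        best = (d2, place)
    diff = (lat, lon)[node['axis']] - place[node['axis']]
    near, far = (node['left'], node['right']) if diff < 0 else (node['right'], node['left'])
    best = nearest(near, lat, lon, best)
    # Visit the other side only if the splitting line is closer than the best hit
    if diff ** 2 < best[0]:
        best = nearest(far, lat, lon, best)
    return best
```

Storing a (country, region) pair as the payload of each place gives both codes for a point in one lookup, which is what the regions setting then chooses from.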

To enable the geocoder, add regions field to the profile. It can have one of the following:

- True or 'all' or 4: geocode down to regions, use their ISO 3166-2 codes.

- False or 'countries' or 2: use only country ISO 3166-1 codes.

- 'some': regions for US and Russia, country codes for other countries.

- A list of uppercase ISO 3166-1 codes: regions for these countries, country codes for others.
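How the regions setting picks the label for a point can be written as a small function. The mapping follows the list above; region_label is a hypothetical name, and it assumes the geocoder has already found the point's ISO 3166-1 country code and ISO 3166-2 region code.

```python
def region_label(country, region, regions):
    """Pick the label stored for a point, given the profile's regions value.

    country: ISO 3166-1 code such as 'DE'; region: ISO 3166-2 code such as 'DE-BY'.
    """
    if regions is None:
        return None  # geocoding disabled
    if regions is True or regions == 'all' or regions == 4:
        detailed = True
    elif regions is False or regions == 'countries' or regions == 2:
        detailed = False
    elif regions == 'some':
        detailed = country in ('US', 'RU')  # United States and Russia
    else:
        detailed = country in regions  # a list of uppercase ISO 3166-1 codes
    return region if detailed and region else country
```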

When you upload the resulting GeoJSON file to the auditing tool (see below), your project will have a dropdown field prompting users to limit their validation work to just one region or country.

After validation (or without it), you may need to import only the points for certain countries or regions. Specify these with the -r command-line argument as a comma-separated list.

JSON Source

You don't need to know Python to prepare the data for conflation. The dataset profile function is optional: instead, you can use the -i option to specify a JSON file with all the data. The file should be a JSON array that looks like this:

[
  {
    "id": 123456,
    "lat": 1.2345,
    "lon": -2.34567,
    "tags": {
      "amenity": "fuel",
      ...
    }
  },
  ...
]

So it is a list of objects with "id", "lat" and "lon" properties, that is, coordinates and a unique identifier from your database. The "tags" property should contain the OpenStreetMap tags to set on the objects. All you need is a program, in any language, that converts your database contents into this form. With PostgreSQL, you can even do that in SQL.
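For example, a CSV export can be converted with Python's standard csv and json modules. The sketch assumes the CSV has id, lat and lon columns and that every other column is an OSM key (amenity, name and so on); empty cells are dropped so they do not become empty tags. The function name is hypothetical, and ids are kept as the strings read from the CSV.

```python
import csv
import json


def csv_to_conflator_json(csv_file, out_file):
    """Write the JSON array format from a CSV with id, lat, lon and tag columns.

    Returns the number of points written.
    """
    points = []
    for row in csv.DictReader(csv_file):
        tags = {k: v for k, v in row.items()
                if k and k not in ('id', 'lat', 'lon') and v}
        points.append({
            'id': row['id'],
            'lat': float(row['lat']),
            'lon': float(row['lon']),
            'tags': tags,
        })
    json.dump(points, out_file, ensure_ascii=False, indent=1)
    return len(points)
```

Open the CSV with newline='' (as the csv module recommends), write the result to a file such as dataset.json, and pass that file to the conflator with the -i option.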

Community Validation

You should not upload any imports to OpenStreetMap without discussing them first. In the case of conflated points, you have a GeoJSON file to show, so your fellow mappers can find mistakes in tagging and estimate the geometric precision of new points. You can do even better: ask for an opinion on each of the imported points. For that, you need to install the Conflation Audit web application. Ilya's instance, with a demo dataset to experiment on, is set up at audit.osmz.ru.


Audit JSON

After all the points have been validated, click the "Download Audit" link. You will receive a JSON file with items that look like this:

{
  "REF12": {
    "move": [-2.033705, 52.036851],
    "override": ["addr:postcode", "addr:housenumber"]
  },
  "REF13": {
    "skip": true
  },
  "REF54": {
    "move": "dataset",
    "keep": ["brand", "operator"],
    "fixme": "There is a duplicate KFC to the west"
  },
  ...
}

There are six possible members for an object:

- "move" with "osm" / "dataset" / [lon, lat];

- "keep" and "override" lists of tag keys;

- "fixme" with a fixme tag value;

- "skip": true for objects to not be imported;

- "comment", now unused, with a reason for skipping an object.

Pass this file to OSM Conflator with the -a parameter, and it will apply the changes to the new OSM file.
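The sketch below shows how the members listed above could be applied to a single proposed object. It is an interpretation, not the conflator's implementation: it assumes "keep" restores the OSM values of the listed keys, "override" puts back the dataset values, and "move" picks the OSM position, the dataset position or explicit [lon, lat] coordinates. apply_audit_entry and the point dict layout (lat, lon and tags keys) are hypothetical.

```python
def apply_audit_entry(entry, proposed, osm=None, dataset=None):
    """Adjust one proposed object according to its audit entry.

    Each point is a dict with 'lat', 'lon' and 'tags'; osm is None for new
    objects. Returns None when the entry says to skip the object.
    """
    if entry.get('skip'):
        return None
    result = {'lat': proposed['lat'], 'lon': proposed['lon'],
              'tags': dict(proposed['tags'])}
    move = entry.get('move')
    if move == 'osm' and osm is not None:
        result['lat'], result['lon'] = osm['lat'], osm['lon']
    elif move == 'dataset' and dataset is not None:
        result['lat'], result['lon'] = dataset['lat'], dataset['lon']
    elif isinstance(move, list):
        result['lon'], result['lat'] = move  # audit coordinates are [lon, lat]
    osm_tags = osm['tags'] if osm else {}
    ds_tags = dataset['tags'] if dataset else {}
    for key in entry.get('keep', []):  # keep the OSM value (or its absence)
        if key in osm_tags:
            result['tags'][key] = osm_tags[key]
        else:
            result['tags'].pop(key, None)
    for key in entry.get('override', []):  # use the dataset value
        if key in ds_tags:
            result['tags'][key] = ds_tags[key]
    if entry.get('fixme'):
        result['tags']['fixme'] = entry['fixme']
    return result
```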

On subsequent imports, do not apply the old audit JSON to a new changes JSON. Instead, upload the old audit JSON along with the new changes JSON to the auditing tool, and after a while you'll get a new audit JSON ready to be used.