Lévis/Proposed Import of STLévis GTFS

This page describes the proposed import process of GTFS data for the STLévis bus service provider in Lévis, Quebec, Canada.

Community Buy-in

Forum thread: https://community.openstreetmap.org/t/importing-stleviss-bus-routes-stops-data-in-levis/111372/2

talk-ca: https://lists.openstreetmap.org/pipermail/talk-ca/2024-April/010978.html

Discord: https://discord.com/channels/413070382636072960/471231102380408832/1225492330497446059

Slack: https://osm-ca.slack.com/archives/C371E794M/p1712354484415109

License Approval

The data is licensed under CC-BY 4.0, and a license waiver has been granted. The project will be added to the Imports Catalogue.

Before Starting

The current state of the network (before import) is shown in the image to the right. Very few stops and only 2 routes are mapped, 1 of which is incomplete. Nevertheless, I manually corrected the existing tags to meet PTv2 and GTFS requirements so that the existing data is easier to handle during the import. This was only feasible because so little was already mapped. For future bulk updates of the network, no manual correction should be needed, because everything should already match, or be very close to, PTv2 and GTFS standards.

Proposed Import Process

I propose to do the import using a Python script that generates JOSM XML files and automatically handles conflation, both within the GTFS dataset and against existing OSM data. It doesn't delete any existing data; instead, it marks existing elements with action=modify where needed and preserves all existing ids. It also makes sure that changes to existing data cascade to relation members. The script takes a STLévis GTFS zip file as input and executes the steps summarized below (latest from here). The script is a work in progress and is meant as a tool to help, not to fully automate everything. It can be accessed here.

- Unzips the GTFS archive and loads the data into memory.

- Using the calendar_dates.txt data, it finds all the service_id values that correspond to the latest date that is a weekday, to ensure that only the latest data and the longest trips are imported.

- Filters the whole GTFS dataset keeping only data that corresponds to the latest service_id.

- Writes the GTFS stops to GeoJSON for visualization (to spot apparent and potential problems).

- It downloads existing OSM data using overpy. This includes existing bus stops (nodes), route relations, and route_master relations along with the member nodes and ways. It converts the data into an internal format to be able to work with it in the script.

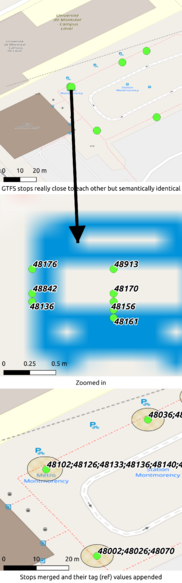

- Handles conflation within the GTFS dataset stops.

- Creates new OSM nodes for the stops making sure to not duplicate or delete existing stops (explained in detail below).

- For each route (route_id), it finds the longest trip (trip_id) in each direction, i.e. the one with the most stops.

- It creates the OSM routes and route_master=* relations from the identified longest trips following PTv2 recommendations, except for name=*, which uses the format Parcours {route_ref} vers {trip_headsign}. It handles conflation with existing relations (explained in detail below).

- Writes the JOSM XML files (nodes and relations).
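The service selection and longest-trip steps above can be sketched roughly as follows. This is only an illustration, not the actual script: the function names are hypothetical, the GTFS tables are assumed to be already loaded as lists of dicts (for example via csv.DictReader), and filtering on exception_type 1 (service added) is an assumption about how the latest weekday is picked.

```python
from collections import defaultdict
from datetime import datetime


def latest_weekday_service_ids(calendar_dates):
    """Return the service_ids active on the latest date that is a weekday.

    `calendar_dates` is a list of dicts with the GTFS calendar_dates.txt
    fields service_id, date (YYYYMMDD) and exception_type.
    """
    weekday_rows = [
        row for row in calendar_dates
        if row["exception_type"] == "1"  # assumption: keep only added service
        and datetime.strptime(row["date"], "%Y%m%d").weekday() < 5  # Mon-Fri
    ]
    if not weekday_rows:
        return set()
    # YYYYMMDD strings sort chronologically, so max() gives the latest date.
    latest = max(row["date"] for row in weekday_rows)
    return {row["service_id"] for row in weekday_rows if row["date"] == latest}


def longest_trips(trips, stop_times, service_ids):
    """Map (route_id, direction_id) to the trip_id with the most stops."""
    stop_counts = defaultdict(int)
    for row in stop_times:
        stop_counts[row["trip_id"]] += 1

    best = {}
    for trip in trips:
        if trip["service_id"] not in service_ids:
            continue  # keep only the selected (latest) services
        key = (trip["route_id"], trip.get("direction_id", "0"))
        if key not in best or stop_counts[trip["trip_id"]] > stop_counts[best[key]]:
            best[key] = trip["trip_id"]
    return best
```

On ties, this sketch keeps the first trip encountered; the real script may break ties differently.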

Conflation handling with existing stops in OSM

In a similar fashion, conflation between the already merged GTFS stops and existing OSM stops is handled by checking whether any existing OSM stop lies within x meters (5 meters was used) of each GTFS stop. If one does, its tags and coordinates are replaced with those of the GTFS stop, keeping the existing OSM id and other information intact. In a single case, more than one existing OSM stop was in the vicinity of a GTFS stop; I handled this case manually and reran the script. This proximity check is only done for existing stops within the boundaries of the Urban agglomeration of Québec.
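A minimal sketch of this proximity check, assuming stops are plain dicts with id, lat, lon and tags keys and using the haversine formula for distance. The names conflate_stop and haversine_m are hypothetical and the real script may measure distance differently; raising an error when several candidates are found simply mirrors the manual handling described above.

```python
import math


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two WGS84 points."""
    r = 6371000.0  # mean Earth radius in meters
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def conflate_stop(gtfs_stop, osm_stops, new_id, max_distance_m=5.0):
    """Merge a GTFS stop into a nearby OSM stop, or create a new node.

    `new_id` is the (negative) placeholder id used if no OSM stop is nearby.
    """
    nearby = [
        s for s in osm_stops
        if haversine_m(gtfs_stop["lat"], gtfs_stop["lon"], s["lat"], s["lon"]) <= max_distance_m
    ]
    if len(nearby) > 1:
        # Ambiguous case: left for manual resolution, as described in the text.
        raise ValueError("several OSM stops near GTFS stop; resolve manually")
    if nearby:
        merged = dict(nearby[0])  # keeps the existing id, version, etc.
        merged["lat"], merged["lon"] = gtfs_stop["lat"], gtfs_stop["lon"]
        merged["tags"] = dict(gtfs_stop["tags"])  # GTFS tags replace existing tags
        merged["action"] = "modify"
        return merged
    return {"id": new_id, "lat": gtfs_stop["lat"], "lon": gtfs_stop["lon"],
            "tags": dict(gtfs_stop["tags"])}
```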

Conflation handling with existing relations in OSM

Once the route relations are created by the script, it compares them with existing OSM route relations whose name, operator, and ref tags match, when applicable. Any matching existing route relation is assigned new tags (from the GTFS dataset), and its member nodes are all replaced with the new nodes (the result of the stop creation and conflation handling in the previous steps). The member ways remain intact, as they are better handled manually in JOSM (if there is a need to change/update them).

Route_master relations are handled in a similar fashion: existing route_master relations all get new tags, and in this case the member relations are completely replaced by the new ones (already created and conflated in the previous steps).
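The route matching and merging could look roughly like the sketch below. It assumes relations are dicts with id, tags and a members list of {"type", "ref", "role"} dicts, and that a match requires all three tags to be equal; the function names are hypothetical and the actual script may match more loosely ("when applicable").

```python
MATCH_KEYS = ("name", "operator", "ref")


def tags_match(existing, new):
    """True if the two relations agree on name, operator and ref."""
    return all(existing["tags"].get(k) == new["tags"].get(k) for k in MATCH_KEYS)


def merge_route(existing, new):
    """Update an existing route relation in place of creating a new one.

    Tags and node members come from the new (GTFS-derived) relation;
    way members of the existing relation are kept untouched.
    """
    kept_ways = [m for m in existing["members"] if m["type"] == "way"]
    new_nodes = [m for m in new["members"] if m["type"] == "node"]
    merged = dict(existing)  # keeps the existing relation id and version
    merged["tags"] = dict(new["tags"])
    merged["members"] = new_nodes + kept_ways  # stops first, then ways
    merged["action"] = "modify"
    return merged


def conflate_routes(existing_routes, new_routes):
    """Return the relations to write: merged where a match exists, new otherwise."""
    result = []
    for new in new_routes:
        match = next((e for e in existing_routes if tags_match(e, new)), None)
        result.append(merge_route(match, new) if match else new)
    return result
```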

During any conflation handling with existing data (nodes or relations), the existing node, way, or relation gets an action=modify attribute written in the output XML.
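As an illustration of how action=modify ends up in the output, here is a minimal sketch that serializes nodes to JOSM-style XML with the standard library. The function name, the dict layout and the generator string are assumptions for the example, not the actual script.

```python
import xml.etree.ElementTree as ET


def nodes_to_josm_xml(nodes):
    """Serialize node dicts (id, lat, lon, tags, optional version/action) to XML."""
    root = ET.Element("osm", version="0.6", generator="gtfs-import-sketch")
    for node in nodes:
        attrs = {
            "id": str(node["id"]),
            "lat": f'{node["lat"]:.7f}',
            "lon": f'{node["lon"]:.7f}',
        }
        if "version" in node:
            # Existing OSM elements carry their current version.
            attrs["version"] = str(node["version"])
        if "action" in node:
            # Set during conflation for elements that were changed.
            attrs["action"] = node["action"]
        element = ET.SubElement(root, "node", attrs)
        for key, value in sorted(node["tags"].items()):
            ET.SubElement(element, "tag", k=key, v=value)
    return ET.tostring(root, encoding="unicode")
```

The resulting string can be saved with an .osm extension and opened in JOSM for review before upload.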