User:Stanton/Mapnik Test Server

This page is a summary of the steps to set up a small test server for playing with Mapnik.

It will be used for personal rendering tests, and I opted for an approach that would get me started as fast as possible. Hence the setup is by no means dimensioned for serving large amounts of tiles or optimized for performance. Things I intend to use the machine for are:

- an OSM Transport Map, originally limited to one city,

- a piste map for a few resorts in the European Alps,

- some renderings of openBmap data.

I try to avoid building any components from source if I can get a precompiled package, and did not look extensively into optimization. I mostly followed the setup instructions for Openptmap, though with some changes as some commands have changed over the course of Ubuntu releases. Also, for the reasons explained before, I installed a precompiled osm2pgsql package rather than building from source.

Code mentioned here can be found on the git repository.

I have built various incarnations of this server: I started out with a QEMU virtual machine and Ubuntu 10.10, then moved on to VirtualBox (which is significantly faster when host and guest have the same processor architecture) and Ubuntu 12.04, and finally to a physical machine since I had a spare one sitting around, also running Ubuntu 12.04. This article has been updated to reflect the latest setup.

Background

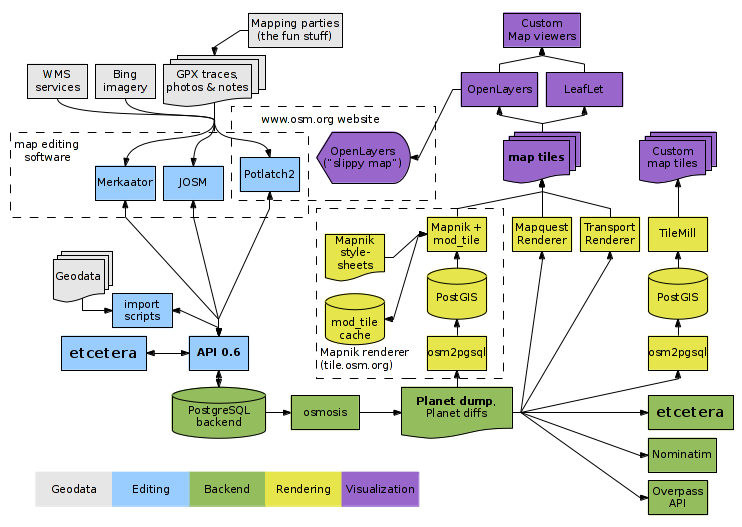

What we're talking about (image from Component overview)...

We'll be setting up the components in the Mapnik renderer box, plus some OpenLayers stuff on top. Our chain will be fed raw OSM data from the "bottom" and produce a map with a web UI (which comes out at the "top"). As you can see from the graphic, the rendering chain on the OSM site works in much the same way. Main differences are that it is fed regular Planet diffs from the main OSM database, and that tiles are (re-)rendered on demand - i.e. when they are requested for the first time after being modified.

Base System

Virtual Machine

For the virtual machines I followed the rule "half the host resources for the VM" for processor and memory. The setup I chose was:

- 20 GB hard disk space

- 1.5 GB memory (half the memory of the host system)

- 1 processor core

- user mode network stack (QEMU) / one network interface with NAT connection (VirtualBox)

- redirected local TCP port 2222 to guest's 22 (needed for SSH access, any available local port will do)

- redirected local TCP port 5432 to guest's 5432 (optional, allows you to connect to PostgreSQL from your host system - choose another local port if your host system is running PostgreSQL already)

- redirected local TCP port 8038 to guest's 80 (needed for HTTP access, i.e. browsing the map – again, the port can be chosen freely)

My first incarnation (3 GHz dual-core Pentium 4 with 1 GByte of RAM, with QEMU and the 50% rule applied) appears to be just around the bare minimum for such a setup - I would occasionally get error messages (failed to find root partition on boot, PostgreSQL failed to start on boot, database timeout while rendering) apparently related to performance as they all seemed related to some operation timing out. This appeared to occur more frequently when there was more load on the host system.

Physical Machine

- 180 GB PATA hard disk

- 3 GB memory

- 3 GHz dual-core Pentium 4

- 100 MBit Ethernet (on board)

The graphics card should not be much of a concern as the system will run in text mode and most of the time it will be accessed remotely.

Operating System

The system runs Ubuntu Server 12.04 in the 32-bit edition (the current LTS at the time of this writing). A 64-bit OS makes sense only if you later decide to upgrade the memory beyond the 4 GB boundary.

- Under Options, select "minimal server" to avoid installing unnecessary packages. This is an important step in hardening a system connected to the Internet – the more tools are installed on our machine, the more likely it is that a hacker can use some of them for his purposes.

- Enable LVM so you can add extra disks later if that is needed.

- During setup, select to install security updates automatically if this system is going to be exposed to the Internet (unless regressions are a major concern, in which case you should make sure that you check for updates regularly and install them on short notice).

- No additional packages were installed during setup (this will be done during configuration).

Setup

Network configuration

If you need a static IP address, configure it now. Login and edit /etc/network/interfaces. Change the entry for the eth0 interface as follows:

auto eth0 iface eth0 inet static address 192.168.42.43 netmask 255.255.255.0 gateway 192.168.42.1 nameserver 192.168.42.1

Replace IP addresses and net masks with parameters appropriate for your network.

Edit /etc/resolv.conf and add a line like the following:

nameserver 192.168.42.1

(again replacing the IP as appropriate). Then run:

sudo /etc/init.d/networking restart

Additional packages

Now it is time to install extra packages.

We will use osm2pgsql to import OSM data into PostGIS. However, the version included with Ubuntu 12.04 cannot yet handle 64-bit IDs, which will cause more recent planet dumps to fail on import. We therefore need to add an external repository with a recent version of osm2pgsql.

Create a file /etc/apt/sources.list.d/krueger.list with the following content:

deb http://ppa.launchpad.net/kakrueger/openstreetmap/ubuntu precise main deb-src http://ppa.launchpad.net/kakrueger/openstreetmap/ubuntu precise main

Then run the following commands to install the packages:

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys AE74800FB745A04C sudo apt-get update sudo apt-get upgrade sudo apt-get install openssh-server sudo apt-get install pm-utils sudo apt-get install apache2 php5 libapache2-mod-php5 subversion autoconf autoconf2.13 automake build-essential libxml2-dev libgeos-dev libbz2-dev proj libtool postgresql postgresql-9.1-postgis postgresql-contrib-9.1 postgresql-server-dev-9.1 libmapnik2-2.0 mapnik-utils python-mapnik2 osm2pgsql unzip

OpenSSH is not really needed but does come in handy for remote administration (especially when running on metal rather than on a VM) and file transfer. You can install it separately before the other packages (as shown here), then do the rest remotely.

By default, OpenSSH will not require public-key authentication but also allow password authentication. If you intend to use your server in an environment in which it can be accessed from outside (i.e. from a network you don't control), it is highly recommended to use public-key authentication: Copy your public key to the server (by doing ssh-copy-id user@host from your desktop machine and, once you have verified you can log in that way, disable password authentication in /etc/ssh/sshd.conf. Then restart sshd.

pm-utils is also not a strict requirement but comes in handy if you are accessing a physical server remotely, allowing you to suspend it via SSH, cron or other and resume it via Wake-on-LAN.

The osm2pgsql setup will offer to create a database. We will do this by hand later, so you can answer No here. Note that you will still be prompted for database parameters, just accept the default values.

PostgreSQL Database

We need to create a new user and initialize a new database. Follow these steps:

sudo -u postgres -i -H createuser -SdR mapuser createdb -E UTF8 -O mapuser mapdb # this is a letter (Oh), not a digit (zero) createlang plpgsql mapdb psql -d mapdb -f /usr/share/postgresql/9.1/contrib/postgis-1.5/postgis.sql psql mapdb -c "ALTER TABLE geometry_columns OWNER TO mapuser" psql mapdb -c "ALTER TABLE spatial_ref_sys OWNER TO mapuser" exit

To enable easy database login by user mapuser you must change some lines in one of the database configuration files. Having set up only a minimal server, I needed to resort to vi (after ten years of occasional use I have learned the basic moves). Feel free to use a more comfortable editor of your choice if you can.

sudo vi /etc/postgresql/9.1/main/pg_hba.conf

Near to the bottom of the file you will find these lines:

local all all peer host all all 127.0.0.1/32 md5 host all all ::1/128 md5

Change the words peer and md5 each to trust.

On a VM, if you plan to connect from your host system, add the following line:

Caution: I have not fully explored the security implications of the following step - it will definitely allow unauthenticated database access from the host system, and probably also from other systems on the same network. Do this only if your PC is on a secure network which you control, or if your PC is configured to block all incoming connections on that port, or if your database contains nothing but unimportant test data.

host all all 10.0.2.0/24 trust

The above is for my test system - run route to find out your VM's network configuration, and specify the address accordingly. Save the file and close the editor.

On a low-resource VM (such as my minimal QEMU) we will need to raise the lock timeout - this is to avoid errors caused by our little VM struggling with the load. On QEMU I didn't need it. Edit /etc/postgresql/9.1/main/postgresql.conf. Near the bottom of the file you will find a line:

#deadlock_timeout = 1s

This is a comment, 1s is the default value. Add another line below:

deadlock_timeout = 120s

You can experiment with that timeout if this doesn't help. The PostgreSQL documentation recommends setting it to the maximum duration you expect for a query and considers 1 second the absolute minimum.

If you are going to connect to PostgreSQL from other machines, you have to tell it to listen for incoming connections on all IP addresses (default is just the local loopback interface):

listen_addresses = '*'

Now save the config file, then restart the database (a simple reload will not do):

sudo /etc/init.d/postgresql stop sudo /etc/init.d/postgresql start

To find out if the network listener is working, run netstat -atun and watch for the following line:

tcp 0 0 0.0.0.0:5432 0.0.0.0:* LISTEN

This will tell you that PostgreSQL is listening for incoming connections on all network interfaces.

For a short test, login to the database by using the previously created database user mapuser:

psql mapdb mapuser

Type \d to see a list with the two tables which we have created some steps above (geometry_columns and spatial_ref_sys). Then logout with \q

If you encounter any problems, you may find a solution here: PostGIS.

Prepare Download Directory

Our example directory for downloading OSM data and generating the data base contents will be "/usr/share/mapgen". So, create this directory and jump into it:

sudo mkdir /usr/share/mapgen cd /usr/share/mapgen

Afterwards, change the access rights of this directory so you have write access with your regular user. For example:

sudo chmod a+rwx .

For security reasons, do not forget to revoke write access as soon as the installation is complete:

sudo chmod -R go-w /usr/share/mapgen # do not run this command now, run it later! sudo chown -R root:root /usr/share/mapgen # do not run this command now, run it later!

If you have chosen a different directory, then you need to adjust all cd /usr/share/mapgen commands in the following statements.

osm2pgsql Configuration

Before using osm2pgsql for the first time, we need to configure two things. For this we need two files which are missing from Kai Krueger's osm2pgsql package for 12.04. It is included in later versions of the package (which are for later Ubuntu versions). Get the saucy version and extract the following files from the /osm2pgsql-0.84.0 folder of the tar archive:

- 900913.sql

- default.style

You can place them anywhere you like. Default locations are /usr/share/osm2pgsql/default.style and /usr/share/doc/osm2pgsql/examples/900913.sql, but they can just as well go in /usr/share/mapgen.

The file "default.style" controls which combinations of OSM data primitives and tags get imported in the database. Any tag/primitive combinations not specified there will not get imported into the database and therefore will not get rendered!

If you modify the file, it is recommended that you make a copy of it and work with that, as the original (if it was installed by the package manager) may get reset or removed by the package manager at the next upgrade. You can keep differently named copies for different purposes (e.g. if you set up more than one database).

In order to allow old-style transport route tagging (ways and nodes tagged line=*) to be accepted by the renderer, append the line

node,way line text linear

You can do this with the editor of your choice (don't forget to use sudo) or by executing these commands:

cd /usr/share/mapgen sudo echo -e "\nnode,way line text linear\n" >> ../osm2pgsql/default.style

For a piste map, we would need to add these lines:

node,way piste:type text linear node,way piste:lift text linear node,way piste:difficulty text linear node,way piste:name text linear node,way piste:ref text linear

If the style file contains any lines ending in phstore, comment them out. Since we have not enabled hstore, they will cause the import to abort with an error.

Now the database needs to be initialized for Spherical Mercator projection:

cd /usr/share/mapgen psql mapdb mapuser -f /path/to/900913.sql

osmfilter

This tool is used to speed-up the PostgreSQL import process. It filters out the tags we are interested in (which we need to specify on the command line). So if you intend to render only a small subset of the available data rather than everything, running the OSM file through osmfilter before passing it to osm2pgsql will make osm2pgsql run faster. On top of this, database queries will be faster too, so the rendering process will be speeded-up as well.

On the other hand, it does discard some data contained in the original OSM data, so be sure to include in the filter everything you might want to render. If you're unsure about what to render or not, it is probably better not to use osmfilter but limit data to a particular area. (Which I am going to do, since all I need is a proof of concept.)

Downloading the binary of osmfilter (to get the 64 bit version replace "32" by "64"):

cd /usr/share/mapgen wget -O osmfilter http://opengastromap.de/osmfilter_ubuntu/osmfilter32 chmod ug+x osmfilter

To use this program we will need a compressed delimiter file. Let's create two versions of it.

cd /usr/share/mapgen echo "<osmfilter_pre/>" |gzip -1 >lim.gz

For further details on this tool refer to osmfilter.

Mapnik Tools

We already installed Mapnik. Now we should care about some additional files we will need in this context.

cd /usr/share/mapgen svn export http://svn.openstreetmap.org/applications/rendering/mapnik svn export http://mapnik-utils.googlecode.com/svn/tags/nik2img/0.8.0/ mapnik-utils cd mapnik-utils sudo python setup.py install

Coastlines

Mapnik needs external shape-file data for coastlines at lower zoom levels; let's download them now. Afterwards we have to unpack the files and dissolve possible subdirectories. The whole procedure can be accomplished by one of the OSM scripts:

cd /usr/share/mapgen cd mapnik ./get-coastlines.sh rm *.tar.bz2 *.tgz *.zip

In case this did not work, go the manual way:

cd /usr/share/mapgen mkdir mapnik mkdir mapnik/world_boundaries cd mapnik/world_boundaries rm ../world_boundaries/* wget http://tile.openstreetmap.org/world_boundaries-spherical.tgz wget http://tile.openstreetmap.org/processed_p.tar.bz2 wget http://tile.openstreetmap.org/shoreline_300.tar.bz2 wget http://www.naturalearthdata.com/http//www.naturalearthdata.com/download/10m/cultural/10m-populated-places.zip wget http://www.naturalearthdata.com/http//www.naturalearthdata.com/download/110m/cultural/110m-admin-0-boundary-lines.zip tar -xjf *.tar.bz2 tar -xzf *.tgz bunzip2 *.bz2 unzip *.zip rm *.tar.bz2 *.tgz mv */* . rmdir * 2>/dev/null

Mapnik Initialization

To use Mapnik, some initializations are to be done.

cd /usr/share/mapgen cd mapnik ./generate_xml.py --host localhost --user mapuser --dbname mapdb --symbols ./symbols/ --world_boundaries ./world_boundaries/ --port '' --password ''

The osm.xml file contains all the settings needed to render the familiar Mapnik view as on the OSM home page. Even if you do not intend to use it, it is probably a good idea to keep it around so you can test your rendering chain with a "known-to-work" XML.

If you want to create your own map view:

- Create a copy of osm.xml and tweak it according to your needs or

- Get a copy of an XML file which does something similar to what you have in mind, and tweak it as needed or

- Create your own XML file from scratch (most difficult).

Afterwards, we can edit the xml file and tweak it to our needs - or rewrite it from scratch if we want to create specialized maps. We can have multiple files of different names for different rendering styles.

Prepare the Website

The web server Apache will expect our web content at "/var/www". Note that you will need write-access rights in this directory. If these rights have not been set automatically during Apache installation, you may want to do this by hand:

sudo chown root:www-data /var/www sudo chmod g+rwx /var/www sudo chown root:www-data /var/www/index.html sudo chmod g+rw /var/www/index.html sudo usermod -aG www-data $USER

To get these changes to work, please relogin.

Having done this, change to that directory and download all necessary files.

cd /var/www mkdir img wget http://openlayers.org/dev/theme/default/style.css wget http://www.openlayers.org/api/OpenLayers.js wget http://www.openstreetmap.org/openlayers/OpenStreetMap.js wget -P img http://www.openstreetmap.org/openlayers/img/blank.gif wget -P img http://www.openstreetmap.org/openlayers/img/cloud-popup-relative.png wget -P img http://www.openstreetmap.org/openlayers/img/drag-rectangle-off.png wget -P img http://www.openstreetmap.org/openlayers/img/drag-rectangle-on.png wget -P img http://www.openstreetmap.org/openlayers/img/east-mini.png wget -P img http://www.openstreetmap.org/openlayers/img/layer-switcher-maximize.png wget -P img http://www.openstreetmap.org/openlayers/img/layer-switcher-minimize.png wget -P img http://www.openstreetmap.org/openlayers/img/marker.png wget -P img http://www.openstreetmap.org/openlayers/img/marker-blue.png wget -P img http://www.openstreetmap.org/openlayers/img/marker-gold.png wget -P img http://www.openstreetmap.org/openlayers/img/marker-green.png wget -P img http://www.openstreetmap.org/openlayers/img/measuring-stick-off.png wget -P img http://www.openstreetmap.org/openlayers/img/measuring-stick-on.png wget -P img http://www.openstreetmap.org/openlayers/img/north-mini.png wget -P img http://www.openstreetmap.org/openlayers/img/panning-hand-off.png wget -P img http://www.openstreetmap.org/openlayers/img/panning-hand-on.png wget -P img http://www.openstreetmap.org/openlayers/img/slider.png wget -P img http://www.openstreetmap.org/openlayers/img/south-mini.png wget -P img http://www.openstreetmap.org/openlayers/img/west-mini.png wget -P img http://www.openstreetmap.org/openlayers/img/zoombar.png wget -P img http://www.openstreetmap.org/openlayers/img/zoom-minus-mini.png wget -P img http://www.openstreetmap.org/openlayers/img/zoom-plus-mini.png wget -P img http://www.openstreetmap.org/openlayers/img/zoom-world-mini.png

Note: sometime in early 2014, Firefox started acting up when loading transparent layers from the test server, displaying pink tiles for each transparent layer until the layer is disabled once and the re-enabled. This appears to be an incompatibility between recent (since early 2014) Firefox versions and old (in my case, late 2012) OpenLayers versions. Getting the latest OpenLayers version has fixed this for me.

Now edit index.html and insert the contents below (remove the existing content; if the file does not exist, create it):

<html>

<head>

<title>My OSM Lab Site</title>

<link rel="stylesheet" href="style.css" type="text/css" />

<script src="OpenLayers.js"></script>

<script src="OpenStreetMap.js"></script>

<script type="text/javascript">

// Start position for the map (hardcoded here for simplicity)

var lon=10.27;

var lat=47.13;

var zoom=14;

var map; //complex object of type OpenLayers.Map

//Initialise the 'map' object

function init() {

map = new OpenLayers.Map ("map", {

controls:[

new OpenLayers.Control.Navigation(),

new OpenLayers.Control.PanZoomBar(),

new OpenLayers.Control.Permalink(),

new OpenLayers.Control.ScaleLine(),

new OpenLayers.Control.Permalink('permalink'),

new OpenLayers.Control.MousePosition(),

new OpenLayers.Control.Attribution()],

maxExtent: new OpenLayers.Bounds(-20037508.34,-20037508.34,20037508.34,20037508.34),

maxResolution: 156543.0399,

numZoomLevels: 19,

units: 'm',

projection: new OpenLayers.Projection("EPSG:900913"),

displayProjection: new OpenLayers.Projection("EPSG:4326")

} );

var tiles = new OpenLayers.Layer.OSM("Local (tiles)","tiles/${z}/${x}/${y}.png");

map.addLayer(tiles);

layerMapnik = new OpenLayers.Layer.OSM.Mapnik("OSM Mapnik");

map.addLayer(layerMapnik);

layerMapnik = new OpenLayers.Layer.OSM.Mapnik("OSM Mapnik, light");

layerMapnik.setOpacity(0.4);

map.addLayer(layerMapnik);

layerCycleMap = new OpenLayers.Layer.OSM.CycleMap("CycleMap");

map.addLayer(layerCycleMap);

layerCycleMap = new OpenLayers.Layer.OSM.CycleMap("CycleMap, light");

layerCycleMap.setOpacity(0.4);

map.addLayer(layerCycleMap);

var blank = new OpenLayers.Layer.OSM("No Background","img/blank.png");

map.addLayer(blank);

// var newLayer = new OpenLayers.Layer.OSM("Public Transport", "tiles/${z}/${x}/${y}.png",

// {numZoomLevels: 19, alpha: true, isBaseLayer: false});

// map.addLayer(newLayer);

var switcherControl = new OpenLayers.Control.LayerSwitcher();

map.addControl(switcherControl);

switcherControl.maximizeControl();

if( ! map.getCenter() ){

var lonLat = new OpenLayers.LonLat(lon, lat).transform(new OpenLayers.Projection("EPSG:4326"), map.getProjectionObject());

map.setCenter (lonLat, zoom);

}

}

</script>

</head>

<!-- body.onload is called once the page is loaded (call the 'init' function) -->

<body onload="init();">

<!-- define a DIV into which the map will appear. Make it take up the whole window -->

<div style="width:100%; height:100%;" id="map"></div><br/>

</body>

</html>

You can later modify the HTML to suit your needs - you can find further information on that here.

Create the Map

This chapter will show you how to create or update the tiles, which will be assembled into the map by the browser on the user end. We assume that all tasks of the previous chapter have been completed successfully.

The process of map creation involves two steps – filling the database and rendering the map tiles.

Fill the Database

All information we need to create the map tiles must be written into our database. To do this, we need to develop a script which performs several tasks, step by step. Please do not try to create and run the whole script from scratch. It is better to run-through the process step by step, entering every single command at the command line terminal. That makes it much easier to find errors.

Get the Planet File

Since this is only a proof of concept, we will not operate on the whole planet file but get an extract of the area we are interested in. There are a bunch of providers serving regularly-updated extracts of whole countries or continents.

For very small areas, like a sample area for our proof of concept, our options are:

- For areas up to the size of a small town, the API using the Export tab on the OSM page. Note that this should not be done on a regular basis (see also API usage policy), but for a one-time download of a small area it should be OK.

- The TRAPI is another candidate. It is designed specifically for rendering tiles: some information (such as object versions) are omitted, and the bounding box requested is always rounded up to tile borders. Its purpose is really to serve data for T@H renderers - but I did not find an explicit usage policy and I figured a one-time download of a constrained area would be OK.

- The XAPI is for read-only queries and will allow downloads of much larger areas than the API. As of January 2011, though, it is still having problems and I did not manage to download an area from it. Download URLs are of the form http://xapi.openstreetmap.org/api/0.6/map?bbox=10.137,47.0944,10.6,47.1591 (replace the bbox arguments with the area you want to download).

- OSM Server Side Script also provides a way to download data from a mirror site; however, requesting data is not all that trivial.

- And, last but not least, if you've recently edited an area in JOSM and happened to save the data to disk (be sure to do that once more after finishing the last upload, as local-only edits will cause hiccups), of course you can use that file as well.

Copy the downloaded file into /usr/share/mapgen.

If we intend to render up-to-date information, the first task of our script will be to download the OSM file of the area we are interested in:

cd /usr/share/mapgen wget -O - http://download.geofabrik.de/osm/europe/germany.osm.bz2 |bunzip2 |gzip -1 >a.osm.gz

Although the file is already available in compressed form, we decided to recompress it, because gzip works a lot faster then bzip2.

Filter the Planet File

As long as we are still tweaking Mapnik around, we can skip this step and just rename the downloaded OSM file to gis.osm.

Once we are set up, we will need to do hierarchic filtering because there will be nodes in ways, ways in relations and relations in other relations. For performance reasons a pre-filtered file will be used for interrelational filtering as there is no need for considering nodes or ways in the first filtering stages. In this example we decide to filter public-transport specific information because we want to create a public transport map.

zcat a.osm.gz|./osmfilter --drop-nodes --drop-ways|gzip -1 >r.osm.gz zcat lim.gz r.osm.gz r.osm.gz r.osm.gz a.osm.gz lim.gz a.osm.gz|./osmfilter -k"amenity=bus_station highway=bus_stop =platform public_transport=platform railway=station =stop =tram_stop =platform =rail route=train =light_rail =subway =tram route=bus =trolleybus =ferry =railway line=rail =light_rail =subway =tram =bus =trolleybus =ferry =railway" >gis.osm

Eventually we will get the file gis.osm, containing only that information we need. The time needed for this process depends heavily on the size of the downloaded area - which is why it is best to start out with a really small area.

Merging OSM Files

If you want to import multiple OSM files, you need to merge them prior to import. (This may be needed if you are using planet extracts, and the region you are render is much smaller than the smallest planet extract containing all of it, thus assembling it from smaller planet extracts will take up less space in your database.)

The following example will merge three OSM files:

osmosis --rx 1.osm --rx 2.osm --rx 3.osm --merge --merge --wx merged.osm

Each --rx option specifies one input file. Files can be in plain OSM format or compresses (gzip or bzip2). The --merge option tells osmosis to merge two streams – note that this option is specified twice here as we have three input files: the first merge operation will merge the first two input files, the second merge operation will take the output of the first one and merge it with the next file. As a rule, to merge n files, you need to specify --merge n–1 times. Finally, the --wx option specifies the file to write the content to. Add a .gz or .bz2 extension to compress it.

When working with compressed files, something like this may be more efficient:

bzcat 1.osm.bz2 | osmosis --rx --file=- --rx 2.osm --rx 3.osm --merge --merge --wx --file=- | bzip2 > merged.osm.bz2

What we have done here is to uncompress one of the input files and pipe it into osmosis, then pipe the output through bzip2. The bzip utilities are more efficient than the libraries osmosis uses, resulting in a performance gain. (The same works for .gz compression, just use bunzip and bzip instead.) However, when we have multiple input files, we can speed up just one in this manner. To maximize performance gains, pick the largest input file, and when your input files are in different compression formats, prefer .bz2 files over .gz files (since the more efficient compression of bz2 comes at the cost of reduced speed).

Transfer the Data to Postgres Database

Now we can transfer the OSM data from the file gis.osm into the Postgres database. The program osm2pgsql will do this job. If you created your own input style earlier, be sure to specify it on the command line, else use the supplied one. Change the name of the OSM file as needed:

cd /usr/share/mapgen osm2pgsql -s -C 700 -d mapdb -U mapuser -S ../osm2pgsql/default.style gis.osm

osm2pgsql can also take compressed (osm.bz2) data directly. This is useful if you want to process downloaded data unfiltered.

This can take a long time. Main factors are:

- the uncompressed size of the original OSM data

- the amount of data filtered out (the more we discarded earlier, the less data we are feeding into the DB)

- the amount of memory available and the size of the cache (-C parameter)

- disk performance

- whether we are running on a physical or a virtual machine (a VM is much slower here)

For a detailed analysis, see Frederik Ramm's presentation Tuning the Mapnik Rendering Chain.

An OSM extract of Germany, filtered as in the above example, should take only a few minutes on a physical machine. An unfiltered OSM extract of Austria can take over two hours on a VM.

Render the Tiles

Test the Chain

First we should test if the renderer works as expected. The nik2img program will help us do this. Let's pick an area for which we have already imported GIS data – set the coordinates with the -c parameter and the zoom with -z. Generate a test image.

As of December 2012, the Mapnik stylesheet contains some bugs and incompatibilities with the osm2pgsql style in the Ubuntu 12.04 LTS repositories. I therefore skipped the test with osm.xml (since currently I have no plans of using that style in any renderings) and instead ran some tests with the Mapquest style as described in User:Stanton/Using the Mapquest Style.

cd /usr/share/mapgen cd mapnik nik2img.py osm.xml image.png -c 10.27 47.13 -z 16 -d 256 256 --no-open -f png256

Open the test image in the application of your choice and verify everything is OK.

If you get an error message, re-run the nik2img command with the -v parameter - this may give you an idea at which step the error occurs. The steps which you should be getting:

- nik2img started

- Format: (your selected output format)

- Loading mapfile

- Loaded style XML

- Setting map view

- Zoomed to (estimated) max extent

- Zooming to center

- Finished setting extents

- SRS

- Map extent

- Map long/lat bbox

- Map center

- Map long/lat center

- Map scale denominator

- Extent of all layers

- Long/lat extent of all layers

- Long/lat center of all layers

- Layers intersecting map

- At current scale of...

- Layer visible (typically multiple steps, one for each layer in the style XML)

- Starting rendering

- Finished rendering map

I got a "PSQL error: timeout expired" after the fourth step (setting the map view) the first times I tried in my QEMU; after rebooting the issue disappeared.

If you've created your own Mapnik style, repeat the procedure using your own style XML instead of osm.xml.

Generate Map Tiles

If the test run was successful, the next step should be generating map tiles. For this purpose, we use the script generate_tiles.py with some modifications. For this purpose, make a copy and name it generate_tiles_custom.py. Edit the script with the editor of your choice.

cd /usr/share/ptgen cd mapnik chomd a+x generate_tiles_custom.py vi generate_tiles_custom.py

First limit the number of threads to spawn - the recommendation is to keep them roughly equivalent to the number of CPU cores, so we should set it to 1 for our VM. Find the definition of NUM_THREADS (line 13 in my case) and change it as shown below. (The default is 4, which may cause timeouts as the single virtual CPU core can't keep up with the load of four simultaneous threads.)

NUM_THREADS = 1

First, the bounding box must be adjusted to our map area. Delete all the lines below # Start with an overview. Replace the deleted lines with these (do not change the 4 spaces indent):

force = int(os.environ['F'])

x1 = float(os.environ['X1'])

y1 = float(os.environ['Y1'])

x2 = float(os.environ['X2'])

y2 = float(os.environ['Y2'])

minZoom = int(os.environ['Z1'])

maxZoom = int(os.environ['Z2'])

bbox = (x1,y1,x2,y2)

render_tiles(bbox, mapfile, tile_dir, minZoom, maxZoom)

Then go up and replace the definition of the function loop (it starts with def loop(self): and ends with self.q.task_done()). Replace the whole definition by these lines (do not change the indents):

def loop(self):

while True:

#Fetch a tile from the queue and render it

r= self.q.get()

if r==None:

self.q.task_done()

break

(name,tile_uri,x,y,z)= r

try:

mtime= os.stat(tile_uri)[8] # ST_MTIME

except:

mtime= 961063200 # June 2000

if z<=12 or (mtime>=946681200 and mtime<=978303540) or force==1:

# low Zoom OR mtime between Jan 2000 and Dec 2000

self.render_tile(tile_uri,x,y,z)

self.q.task_done()

Now start the script:

cd /usr/share/mapgen cd mapnik F=1 X1=10.137 Y1=47.0944 X2=10.3183 Y2=47.1591 Z1=5 Z2=15 MAPNIK_MAP_FILE="osm.xml" MAPNIK_TILE_DIR="/var/www/tiles/" ./generate_tiles_custom.py &

Depending on the hardware, size of the area and zoom levels selected, it may take several hours to render all the tiles. (My example took around an hour to render, in the setup described above.)

In case you want to abort the rendering, you have to kill the python process (using the kill command or Gnome System Monitor) because Ctrl-C does not work here. (This is why you should add the final ampersand - that way the shell will output the renderer's process ID, run it in the background and return to the command line where you can issue the kill command if needed.) In my case, the process would render but then hang and not terminate - however, this happens only with one of my sample areas and not on the other.

To tweak the rendering, set the environment variables preceding the call to generate_tiles_custom.py accordingly:

- F Force rendering. If 1, existing tiles will be overwritten; if 0, tiles will get rendered only if they don't exist yet, are very old (creation timestamp between Jan and Dec 2000) or the zoom levels is lower than 12 (hardcoded in our modified script).

- X1, Y1, X2, Y2 Bounding box of area to render (coordinates). Make sure that X1 < X2 and Y1 < Y2 (tried and true for northern latitude, eastern longitude) - if the renderer generates just an empty folder hierarchy without any bitmaps in them, try swapping these parameters.

- Z1, Z2 Minimum and maximum zoom levels to render

- MAPNIK_MAP_FILE Style XML to use for Mapnik

- MAPNIK_TILE_DIR Directory where generated tiles will be placed. If the directory does not exist, it will be created.

If you use multiple style XMLs for offering multiple map views, run the script multiple times, each time specifying a different combination of MAPNIK_MAP_FILE and MAPNIK_TILE_DIR.

Test the Map

Point your browser to your web server. For the VM setup which I used, the address would be http://localhost:8038. Zoom to an area within your bounding box, in any of the zoom levels you decided to render. Activate your layer, pan and zoom around a little to make sure everything works as expected.

Add More Layers

If you are rendering multiple sets of tiles with multiple Mapnik styles, add them to your HTML file.

For a base layer, add something like the following:

var myLayer = new OpenLayers.Layer.OSM("My Layer","mylayer/${z}/${x}/${y}.png");

myLayer.setOpacity(0.4); // for a "lighter" layer, leave out otherwise

map.addLayer(myLayer);

For an overlay, the code snippet would be:

var myLayer = new OpenLayers.Layer.OSM("My Layer", "mylayer/${z}/${x}/${y}.png",

{numZoomLevels: 19, alpha: true, isBaseLayer: false});

map.addLayer(myLayer);

Look for similar code sections in the HTML file and add your snippet there. The order in which layers are listed in the selection is the one in which they appear in the script (except that base layers will always be shown before overlays).

The above snippets assume the variable name myLayer for your layer object (change it to anything you want; it is recommended to use a separate variable for each layer). It is furthermore assumed that your tiles reside in /mylayer on your web server - again, change to match the actual path. Last, set the display name from "My Layer" to something meaningful.

Live Rendering with LiveTiles TileLite

LiveTiles is an easy way to render tiles just as they are requested. While the "just in time" rendering approach is not suitable for a production server, it is useful if you are developing map styles and would like to quickly see what your new style looks like.

TileLite is an easy way to render tiles "ad hoc" when speed to first rendering is a priority. It allows us to try out styles easily.

First install libapache2-mod-wsgi:

sudo apt-get install libapache2-mod-wsgi

Then install tilegen:

cd /usr/share/mapgen wget http://bitbucket.org/springmeyer/tilelite/get/tip.zip unzip tip.zip mv *tilelite* tilelite cd tilelite

Now edit tilelite.py: in line 15, change

import mapnik

to

import mapnik2 as mapnik

Then do:

sudo python setup.py install

Now make one copy of tiles_app.py for each style you are going to serve. At the end of the copy, replace /home/mapnik/osm.xml with the full path to your style file. As a convention, I suggest naming the script which corresponds to foo.xml tiles_foo.py and so on.

For each script copy, add a file in /etc/apache2/conf.d. (Again, I suggest that the config file that goes with foo.xml and tiles_foo.py be named tilelite_foo.) The content of the file is as follows:

WSGIScriptAlias /<url> /usr/share/mapgen/tilelite/tiles_foo.py

WSGIDaemonProcess <process name> user=www-data group=www-data processes=10 threads=1

WSGIProcessGroup <process name>

<url> can be any path on your web server, I recommend it contain foo (name of your style) somewhere in the name. Likewise, change tiles_foo.py to the actual name of your script.

<process name> is an arbitrary (but unique) name for the process. I recommend tilelite_foo.

The user and group values can be set to any valid user with the necessary permissions to render tiles. www-data should work on a standard Ubuntu setup.

When you are done, run

sudo apache2ctl configtest sudo /etc/init.d/apache2 restart

Now edit /var/www/index.html and add the new layers.

Open your test machine's site in your browser and look at the result. If you are getting blank images, richt-click one and select "View Image" to troubleshoot.

Utilities on the host system

There are a few tools and tricks which can make your testing life a lot easier. Since this setup assumes a test system with no GUI, you will need to set them up on the host system. If you can't or don't want to do that, you can also install this stuff on a separate machine or another VM (though running two VMs off the same host system can drastically slow things down). None of these is really needed - each section explains how to achieve similar results using nothing other than what is installed on the test machine.

Mapnik Viewer

No longer available on Ubuntu 12.04 LTS.

Mapnik Viewer allows you to quickly render a map to test a new style. If you can't or don't want to install Mapnik Viewer locally, you can still render some test areas using nik2img.py on the test system.

- On Ubuntu, just install mapnik-viewer; all necessary packages will be installed automatically. On other OSes, your mileage may vary...

- If you have set up PostgreSQL as described above, you can share Mapnik XML files between the host system and the guest system. If the port or authentication settings vary between host and guest system, you need to adapt these parameters in the XML files - depending on whether you are going to use them on the host or guest system. Alternatively, you can reconfigure PostgreSQL to use a different port and/or require authentication on the guest system.

- For debugging, it may help if you start mapnik-viewer from a terminal window - that way, you will get status and error messages in the console window that you would otherwise miss.

- A problem in the current mapnik-viewer is that, upon loading a map, it zooms to all available data. The console window will give you the bounding box coordinates, but in the spatial reference system in which the coordinates are stored in the database - which is not very intuitive. You'll end up relying mostly on visual orientation - but that works only if your map contains data to show even at low zoom levels.

OpenOffice.org/LibreOffice PostgreSQL driver

If you are using OpenOffice.org or LibreOffice, installing the PostgreSQL driver lets you use Base as a front end for firing test queries at the database. Alternatively, you can always run psql ptgis ptuser on the console and enter queries on the command line.

- On Ubuntu, install libreoffice-sdbc-postgresql (on older versions with OpenOffice.org, use openoffice.org-sdbc-postgresql).

- Windows users can download the driver from http://dba.openoffice.org/drivers/postgresql/index.html

- MacOS X users will need to resort to the JDBC driver found at http://jdbc.postgresql.org/download.html (this driver will also work on other OSes). Functionality may differ between the SDBC and JDBC drivers.

To set up the connection:

- After starting Base, select "Connect to a database"

- Next, select the postgresql driver

- The connection parameters for the setup described here are host=localhost port=5432 dbname=ptgis

- The next step of the wizard will ask for the user name, in our example that would be ptuser

- Registering the database in OpenOffice.org is not required for this to work, though you may do so if you wish.

- Save the database (the file will hold only your settings but no data).

Now you can go to the Queries tab and click Create query in SQL view... A new window will open, where you can enter SQL queries. Be sure to activate Run SQL command directly (the SQL button at the very right of the top toolbar, or the respective command in the Edit menu) - not doing so may cause your query to fail with a non-obvious syntax error. Clicking Run Query (or hitting F5) will execute the query and display the results at the top of the window, while in the bottom half you can tweak your query and re-run it anytime you wish.

A tutorial is available at: http://www.postgresonline.com/journal/archives/8-Using-OpenOffice-Base-2.3.1-with-PostgreSQL.html

Experiments

Time to start playing around...

Mapnik in b/w

I want to render public transport lines on a somewhat neutral map background. Kay Drangmeister's OSM Parking Map has a Mapnik background in black and white, which seems to be just perfect for that purpose. It is generated by a Python script, which is available on OSM SVN. This script will also convert Mapnik symbolizers to black and white (ImageMagick must be installed for this to work).

There are also two ready-made black-and-white style files in the tree, but the Python script has the side effect of merging all included files (which may contain site-specific parameters) into the main file, so the pre-rendered files are useless unless we're running in a 100% clone of the author's environment.

Lesson learned: either generate the target XML in-place (on the server, in the dir which holds the original style file), or copy the whole structure of the style file and all dependencies (typically the inc and symbols subdirs) to the dir in which you will run this script. Otherwise the script may fail because files are missing, or the resulting style file will give errors (won't find database, icons missing etc.).

That being said, here's what to do: First get the interesting stuff from SVN:

cd /usr/share/ptgen cd mapnik svn export http://svn.openstreetmap.org/applications/rendering/parking/mapnik/mapnik-to-bw.py svn export http://svn.openstreetmap.org/applications/rendering/parking/mapnik/pxdom.py sudo apt-get install imagemagick

The parameters to call the py script are:

- -s Directory in which the original style file resides, default is current dir

- -f Filename of original style file, default is osm.xml

- -d Output dir, default is /tmp

The script will create two dirs, bw-mapnik and bw-noicons, in the destination path. Each contains a style file and a subdir with the converted icons.

python mapnik-to-bw.py -d .

Images are converted first, then the XML file is processed - the latter takes longest and the script seems to be doing nothing at the beginning, all you'll notice is that the processor load is constantly at 100%. The whole process is time-consuming - it takes over an hour on my VM - but you will need to repeat this process only if you decide to modify your original style file or the conversion script itself.

If you used any paths relative to /usr/share/ptgen/mapnik in your style file, the newly-generated one will interpret that relatively to its own path. This is an issue with world_boundaries, fix that by creating a symlink:

cd /usr/share/ptgen/mapnik/bw-mapnik ln -s ../world_boundaries world_boundaries cd ..

Now we can try to render the area with nik2img:

nik2img.py bw-mapnik/osm-bw.xml map_osm-bw.png -c 10.27 47.13 -z 16 -d 1024 768 --no-open -f png256

Repeat the same procedure for bw-noicons:

cd /usr/share/ptgen/mapnik/bw-noicons ln -s ../world_boundaries world_boundaries cd .. nik2img.py bw-noicons/osm-bw-noicons.xml map_osm-bw-noicons.png -c 10.27 47.13 -z 16 -d 1024 768 --no-open -f png256

Examine the output. I concluded that the bw-mapnik output is more suited to my needs - it's a 100% clone of the original style sheet, except that it is in black and white. (The bw-noicons map contains the same set of map features but displays no icons.) My aim is to have basically a Mapnik view, with most (not all) features rendered in black and white, as well as a reduced set of POIs.

Selective Treatment of Map Features

As mentioned above, I want to generate the style file for my map background automatically from the current default style. Map features present in that style file should be treated in three different ways, depending on the feature:

- Copy as-is (maintaining color, icons etc.)

- Convert to black-and-white (area style, icons and text)

- Drop the map feature altogether

This will need some adaptations to Kay's script. It would be most convenient to have a "meta-style" telling the script how to treat which map feature - this would have the advantage of easily allowing re-configuration if new map features are added to the main style sheet or the conversion logic is to be altered.

But first we will need some background knowledge on the Mapnik style file used by OSM - namely, its XML structure.

- Map is the top level XML element , the container for everything else.

- FontSet elements are children of Map, the current osm.xml includes them from a separate file. We can leave these alone for now.

- Style elements are also children of Map. Each style has a name and determines how to render certain map elements.

- Each style element can have multiple Rule elements. These can specify zoom factors at which they apply, as well as extra filters on the map features for which they are used. They contain one or more child elements which correspond to lines, areas, text or icons that will be added to the map.

- Layer elements are also children of Map. Each layer has a name, a visibility setting and a projection method. It corresponds to a group of (ideally related) map features. Children of each Layer are:

- StyleName elements (one or more) - these determine which Style is applied to the map features on the layer.

- Datasource specifies where the data for these map features comes from - shapefiles for coastlines, our PostGIS database for most other map features. All of this is found inside Parameter child elements. For PostGIS data, generic database settings are included from other files, only the table parameter (a SQL query which will fetch the actual objects to be processed on this layer) is specified directly.

Looking at all this, color/icon conversion rules are most easily applied to Styles, being the first named element around the actual format rules. Dropping map features can be implemented in different ways:

- by eliminating a layer

- by setting a layer's visibility to no

- by eliminating all Rules from the corresponding Style or

- by eliminating the corresponding Style (and all references of any layer to it)

Note that when multiple layers use the same style the first two ways may produce different results from the last two.

My strategy is to control all format conversion via Styles - this way, conversion rules can easily be changed between "keep as-is", "convert to b-w" and "drop" while minimizing the potential for unexpected side effects.

I eventually went for the last strategy: In order to drop map features, I remove the corresponding style from the file. From the Layers I delete all references to that style. If this leaves a layer without any styles, I delete that layer as well.

A minor difficulty is the current (as of January 2011) structure of osm.xml: In some cases huge groups of map features are clustered into the same style where I need different conversion strategies - one example being leisure, which contains most amenities and landcovers. We will need to split such styles in two.

After modifying the script in the intended way and trying it out, I found the background still to prominent and distracting from the line information. As a quick workaround I set its opacity in OpenLayers to 0.6 - still legible (better than Openptmap, which uses 0.4), but more subtle than the full color set. This works for web viewing, but for map export the opacity needs to be "baked in", which requires modifying the style sheet. So the script needs to provide the additional capacity of modifying the brightness (which is basically what happens when overlaying a semitransparent image over a white background).

The Meta-Style

The meta-style file looks like this:

<metastyles>

<!-- The single root element -->

<metastyle name="default" default-strategy="color" default-brightness="0.4" background="bw" background-brightness="0.4">

<!-- A metastyle (at least one is required, it is possible to have more than one) -->

<!-- name is a unique identifier of the metastyle (so the script can be instructed to apply this particular style) -->

<!-- default-strategy is the transformation strategy to be applied to all styles not listed explicitly -->

<!-- color: keep the style with its original colors -->

<!-- bw: convert all colors to grayscale and use grayscale versions of icons -->

<!-- drop: do not include this style in the target stylesheet -->

<!-- default-brightness is the brightness correction to be applied to all styles where it is not specified explicitly -->

<!-- 0: keep brightness intact -->

<!-- negative: darken (-1 is pure black) -->

<!-- positive: brighten (1 is pure white) -->

<!-- if not specified, 0 is assumed -->

<!-- background is the transformation strategy to apply to the map background (color or bw) -->

<!-- background-brightness is the brightness to apply to the map background -->

<!-- if not specified, default-brightness (or 0, if not defined) is used) -->

<transform style="amenities" strategy="bw" brightness="0.4"/>

<!-- How to transform a particular style. This one converts the amenities style to grayscale and raises brightness by blending with 40% of pure white -->

<!-- style is the name of the style -->

<!-- strategy is the transformation strategy to apply (same logic as default-strategy) -->

<!-- brightness is the brightness correction to apply (if omitted, default-brightness is used, otherwise same logic as default-brightness -->

<transform style="stops" strategy="drop"/>

<!-- the style "stops" will be dropped, i.e. its elements will not be rendered -->

<transform style="water" strategy="color"/>

<!-- the style "water" will be rendered in its original colors -->

<!-- note this is identical to default-strategy, so removing this line will not have any effect -->

</metastyle>

<metastyle name="minimal" default-strategy="drop" background="color">

<!-- this metastyle will render only what is explicitly included below -->

<!-- ... -->

</metastyle>

</metastyles>

The Script

The conversion script is a Python script based loosely on Kay's aforementioned script. You can find it in my git repository

The script is called from the directory which holds the original style file and takes the following input parameters:

- -i Input file (default is osm.xml)

- -o Output file (default is osm-bw.xml)

- -s symbols directory (which contains all map icons, default is symbols)

- -b Directory in which converted b/w icons will be placed (default is bw-symbols, will be created if it does not exist)

- -m Meta-style file (default is metastyle.xml)

- -n Name of meta-style to use (default is default)

It has been tested only for the case in which the input file, the output file, the metastyle file, the symbols dir and the bw-symbols dir are all located directly in the current directory. Using more complex (including absolute) path names for any of the above may work (to some extent it probably will) but has not been tested.

Grouping Stops

I want to copy Öpnvkarte's excellent feature of rendering a group of adjacent stops of the same name as a single point or area. For this, we will need to use some PostGIS aggregate functions.

To get a feeling for it, we'll fire some manual queries at our database. Connect to your PostGIS database using OpenOffice Base or the database console (see above) and enter the following query. Set the name column to a name which you know exists multiple times in your dataset, and don't forget the terminating semicolon (this will tell the DBMS that you're done entering your query and want to see the results).

select * from planet_osm_point where name='Piazza Firenze';

If you use the console, the output is most likely too wide for your screen and you'll find it hard to make anything of it. But we'll try anyway: the top rows are the column names. Below that are the rows returned; column widths are identical. With some imagination you should be able to figure out which columns actually contain useful data, and then retry the query asking only for those columns:

select osm_id, highway, name, railway, ref, way from planet_osm_point where name='Piazza Firenze';

If your console window has a sufficient number of columns, you should now get a legible table. (Otherwise make the console window wide enough for the text to fit and retry.) You should now clearly see the node IDs and tag values of the selected points. However, the way column is just a cryptic hex string. Let's make that legible using the ST_AsText function:

select osm_id, highway, name, railway, ref, ST_AsText(way) from planet_osm_point where name='Piazza Firenze';

Now the last column should contain values like:

POINT(1019353.90189595 5698723.5701023)

So we are looking at a point. The values in parentheses are the coordinates - although not in degrees but in meters. The first is the longitude - our point is some 1000 km east of Greenwich. The second value is the latitude - some 5700 km north of the equator. We're looking at a point which is just a little more than 9 degrees east and just above 45 degrees north - that is, it is located in Milan, Italy.

The Perfect Solution

Now here comes the first part of the magic: We want an area which is just big enough to enclose all the points we got in this query. For this, we repeat the query as a group query, grouping by the name column. We use the ST_Collect aggregate function to gather all the points in a single row, and transform them into a polygon using the ST_ConvexHull function - this function generates the smallest convex polygon which encloses all points passed to it. (An enclosing ST_AsText will help us make it legible again.)

select name, ST_AsText(ST_ConvexHull(ST_Collect(way))) from planet_osm_point group by name where name='Piazza Firenze';

Bingo! What we get is a single row: the name is Piazza Firenze and the second column contains a POLYGON made up of the points we got before (or some of them, the missing ones would then be inside that polygon).

If we would rather have a centroid (the central point) instead of the whole area, we will need a different function, called ST_Centroid:

select name, ST_AsText(ST_Centroid(ST_Collect(way))) from planet_osm_point group by name where name='Piazza Firenze';

Again, the result should be one row. The first column is the name, and the second column contains the coordinates of the centroid. If you look at is closely, you will see that the coordinates are somewhere in between those you got in the second example.

However, there's a catch: this works so nicely because we are working with a very constrained area - a single city, in that case. If you were to do the same on a bigger region, you would find the database happily merging the stops of two nearby villages because both happen to be named "Church". And public transport companies do their own share to add to the confusion: Vilnius has two bus stops named "Šilo", each located at a different end of Šilo gatvė, at a distance of roughly 3 km. So even if we filtered by city, we'd still end up lumping these two together.

Our best bet is to filter on spatial information. Unfortunately, the GROUP BY clause does not understand criteria like "groups of objects within X meters of one another" - we will have to make that information more digestible for a database.

We're essentially talking about a universal mathematical problem here: cluster analysis. Compared to the common case, we do have an advantage as in our case the clusters (groups of stops belonging together) are visible to the naked eye - the distance between any two points in the cluster is likely to be significantly smaller than the distance between two disjoint clusters, so the way different algorithms handle ambiguous cases make less of a difference for us.

Algorithms which may fit our purposes are:

- QT clustering algorithm - clustering data based on maximum cluster diameters (i.e. maximum distance from one stop to another)

- CURE data clustering algorithm - preprocessing for large sets of data

Turns out this will require some complex code - if we do this within the SQL query (if at all possible), it's going to slow down rendering. The other option is preprocessing of the data.

A Quick and Dirty Solution

For the click functionality (returning the entire stop when one of its poles is clicked), things are easier: we have one element (the stop that was clicked) and can search for all stops of the same name within a certain range around it. We'll get to that later; first of all, we need to render the map.

A simplified approach for rendering, which may do for now, would be to assign a "rank" to each stop, depending on the service (e.g. 1 for bus, 2 for tram, 3 for subway, 4 for suburban railway). The Openptmap style does something like this.

Using these ranks, we would render stop names only if there is no other stop of a higher rank and with the same name within a certain range. For a group of 2 tram and 4 bus stops at least we would render the name only twice instead of six times. Since tram stops "win" over bus stops, the name would be rendered in the more prominent style for tram stops.

As a refinement of the above, we could reduce the two labels to one by rendering labels only when there is no nearby stop of the same name with a higher rank OR the same rank and a lower ID. It would make make the label position somewhat arbitrary (it could be on an edge of the stop group rather than in its center) but results should be usable.

A query to return stops with a weight could look like this:

select osm_id,way,name,highway,railway,aerialway,amenity,case

when railway='station' then 1

when railway='halt' then 2

when railway='tram_stop' then 3

when amenity='bus_station' then 4

when aerialway='station' then 5

when highway='bus_stop' then 6

else 9 end as rank

from planet_osm_point

where (highway in ('bus_station','bus_stop') or amenity='bus_station' or

railway in ('station','halt','tram_stop') or aerialway='station')

and not coalesce(disused,'')='yes'

There's a catch: since the rank column is calculated on the fly, we cannot filter on it in the WHERE clause (a condition for filtering out stops based on the value of that column).

The other possible approach, creating a temporary table using the above syntax and a WITH clause, fails because the Mapnik/PostGIS rendering chain appears to insist that every table used (including temporary tables, subqueries and views) be registered in geometry_columns. The only exception seem to be (physical) tables which store no geometry information. The most convenient way to get stops in the intended manner is probably to define the query shown above as a view and registering it in geometry_columns.

The following commands will do that. Drop them into a text file and save it as stop_view.sql (e.g. in the /usr/share/ptgen dir).

create view planet_osm_stop as

select osm_id,way,name,highway,railway,aerialway,amenity,case

when railway='station' then 1

when railway='halt' then 2

when railway='tram_stop' then 3

when amenity='bus_station' then 4

when aerialway='station' then 5

when highway='bus_stop' then 6

else 9 end as rank

from planet_osm_point

where (highway in ('bus_station','bus_stop') or amenity='bus_station' or

railway in ('station','halt','tram_stop') or aerialway='station')

and not coalesce(disused,'')='yes';

insert into geometry_columns(f_table_catalog, f_table_schema, f_table_name, f_geometry_column, coord_dimension, srid, "type")

select '', 'public', 'planet_osm_stop', 'way', ST_CoordDim(way), ST_SRID(way), GeometryType(way)

from planet_osm_stop LIMIT 1;

The above processes only planet_osm_point, which contains only point geometries. As a refinement, include a UNION with the same query against planet_osm_polygon to the view. Convert the osm_id column to negative values for polygons to ensure IDs are unique: Duplicate IDs may later lead to duplicate labels - admittedly, only if the name and rank are also the same and they are both close to each other.

Run the commands using:

psql ptgis ptuser -f stop_view.sql

Now insert the following query as the datasource for stop labels in the Mapnik style:

(select osm_id,way,name,highway,railway,aerialway,amenity,rank

from &prefix;_stop rs

where not exists (

select * from &prefix;_stop cs

where ((cs.rank < rs.rank) or ((cs.rank = rs.rank) and (cs.osm_id < rs.osm_id)))

and (cs.name = rs.name)

and (ST_Distance(rs.way,cs.way) < 250)

)

) as stations

Try it out with nik2img. It turns out that the use of spatial function is not extraordinarily expensive; a simple public transport style using it still renders significantly faster than OSM's default style.

Points to consider and possible optimizations:

- If you see some labels missing, this might have to do with Mapnik's collision avoidance algorithm. Since the only label is now placed at a fairly random location among the individual stops, it is quite likely to collide with other labels or point symbolizers. You may therefore want to allow overlapping labels at higher zoom levels (around 16, possibly using a lower threshold for more "prominent" stations).

- For debugging purposes, just allowing overlapping in general will do.

- If you allow overlapping and render stop levels with different styles for different modes of transport, be sure to include the rank column as a filtering criterion. Otherwise, stops served by more than one mode of transport will render with multiple overlapping labels.

- Using the OSM ID to single out one stop from each group of the same rank is fairly arbitrary; possibly using the location is a better idea. For instance, if labels render above the stops, pick the northernmost stop when in doubt. You will still need the osm_id for filtering as the latitude and longitude are not guaranteed to be unique.

You may want to try this out on some spread-out clusters of stops in an area you know and tweak some parameters until you are getting the desired result:

- Maximum distance between stops: Try to find something above the maximum diameter of a stop group but below the minimum distance of two unrelated stops of the same name.

- Which labels to render at which zoom level

- Zoom level at which labels should be allowed to overlap

This can take a decent amount of time...

A Nicer Solution

After trying a few things, then leaving the problem alone for a while, then trying again I came up with a solution which might work satisfactorily:

For each stop, get a list of all "neighbors", i.e. stops with the same name and within a predefined distance from each other (250 m for now). The IDs of these neighbors are stored in an array (I guess this is the part that is so hard to come up with if you've been infiltrated with the notion of first normal form for many years).

A second, recursive (or rather, iterative) pass then goes through the neighbor lists, looks at each neighbor and merges the neighbors' lists of neighbors into the list. This continues until there is nothing more to add.

A group is then defined as follows: All stops in the group have the same name, and each stop is within 250 m of at least one other stop in the group. Note that two randomly chosen stops may be further than 250 m apart. In theory, this kind of clustering may degenerate into arbitrarily long chains of stops. That would, however, only happen if all stops in that chain were to have the same name, which is unlikely to occur in practice. Hence such degeneration is a negligible risk - unless you choose an insanely high minimum distance somewhere in the kilometer range.

Now, as a first step, let's gather the list of neighbors:

select s1.osm_id as osm_id,s1.name as name,array_agg(s2.osm_id) as neighbors

from planet_osm_point s1, planet_osm_point s2

where (s1.highway in ('bus_station','bus_stop') or s1.amenity='bus_station' or

s1.railway in ('station','halt','tram_stop') or s1.aerialway='station')

and not coalesce(s1.disused,'')='yes'

and (s2.highway in ('bus_station','bus_stop') or s2.amenity='bus_station' or

s2.railway in ('station','halt','tram_stop') or s2.aerialway='station')

and not coalesce(s2.disused,'')='yes'

and s1.name = s2.name

and (ST_Distance(s1.way,s2.way) < 250)

group by s1.osm_id, s1.name;

This works but returns a lot of duplicates - a group of five stops would give us five rows, each with a different osm_id but all having the same list of neighbors. We can filter out identical lists and just keep the one with the lowest ID. That requires a DISTINCT ON clause for the neighbors column; sorting by the osm_id column ensures that we pick the first (lowest) ID. Caveat: the criteria for the DISTINCT ON clause need to be the first criteria in the ORDER BY clause, else PostgreSQL will throw an error (this is not documented in the PostgreSQL 8.4 docs where I'd expect it to - next to the documentation for DISTINCT ON - a Google search pointed me to the 9.0 docs, where this is mentioned).

select distinct on (neighbors)

s1.osm_id as osm_id, s1.name as name, array_agg(s2.osm_id) as neighbors

from planet_osm_point s1, planet_osm_point s2

where (s1.highway in ('bus_station','bus_stop') or s1.amenity='bus_station' or

s1.railway in ('station','halt','tram_stop') or s1.aerialway='station')

and not coalesce(s1.disused,'')='yes'

and (s2.highway in ('bus_station','bus_stop') or s2.amenity='bus_station' or

s2.railway in ('station','halt','tram_stop') or s2.aerialway='station')

and not coalesce(s2.disused,'')='yes'

and s1.name = s2.name

and (ST_Distance(s1.way,s2.way) < 250)

group by s1.osm_id, s1.name

order by neighbors, osm_id;

This works as all arrays seem to be sorted, so arrays containing the same elements are equal. (I haven't figured out why, but if it turns out that this isn't always the case, we would need to sort the neighbors array manually in the query.)

Next we need to create some tools: What we colloquially referred to as the list of stops are sets in a mathematical sense. However, PostgreSQL has no internal support for sets but only for arrays. The difference: Arrays hold elements in a defined order and can hold the same element more than once, whereas sets are unsorted and cannot contain an element multiple times. Hence:

- [1,2,2,3] is a legitimate array, but {1,2,2,3} is not a legitimate set (however, {1,2,3} is).

- The arrays [4,5,6] and [6,5,4] are different from each other, whereas the sets {4,5,6} and {6,5,4} are identical.

Arrays can be used instead of sets and PostgreSQL even comes with some set operators which can be used on arrays. When performing set operations on arrays, duplicate members and the order of members are simply ignored - that is, [1,2,2,3] will be treated as {1,2,3}

For the operations that aren't included, we need to write our own functions. Here's how:

Merging two lists of nearby stops essentially means determining the union of two sets.

create function array_join(anyarray, anyarray) returns anyarray as $$ select array(select distinct unnest($1 || $2)) $$ language sql;

Since we're going to do this across a bunch of arrays returned by a group query, we also need an aggregate function for this.

create aggregate agg_array_join(anyarray)

(

sfunc = array_join,

stype = anyarray,

initcond = '{}'

);

In order to determine whether two sets are equal, we can ensure that the arrays contain the elements in the same order and without duplicates, then do normal array comparison. The sort function is a slight modification of the array_sort function by David Fetter:

create function array_sort (anyarray) returns anyarray as $$

select array(

select distinct $1[s.i] AS "item"

from

generate_series(array_lower($1,1), array_upper($1,1)) AS s(i)

order by item

);

$$ language sql;

Finally we will generate the recursive query. For this we will use recursive common-table expressions (CTEs): In a nutshell, CTEs are views defined "on the fly" within a query and given a name. The query can then refer to the name rather than including a full subquery. Recursive CTEs are CTEs which can refer to themselves. You may want to read the PostgreSQL documentation on this if this concept is new to you.

My first shot at the full query would eventually come up with something useful, but only after a long time (~90 seconds) and the results included duplicates as well as entries with half-complete groups (essentially the output of each pass). The first optimization included eliminating duplicates from the query to get the immediate neighbors (as shown above). It also turned out that I had one apparently costly comparison operation:

where sn2.osm_id in (select unnest(sn1.neighbors))

While the duplicate elimination near-tripled the speed (from ~90 to ~35 seconds), replacing the above comparison with

where sn1.neighbors && sn2.neighbors

increased performance by a factor of 5 (~6 vs. ~35 seconds). Both operations are functionally equivalent in our case - the osm_id is always found in the neighbors array, and both are just two different ways of saying "if two groups contain the same stop, merge them".

Here's the query:

with recursive stop_neighbors as

(

select distinct on (neighbors)

s1.osm_id as osm_id,s1.name as name,array_agg(s2.osm_id) as neighbors

from planet_osm_point s1, planet_osm_point s2

where (s1.highway in ('bus_station','bus_stop') or s1.amenity='bus_station' or

s1.railway in ('station','halt','tram_stop') or s1.aerialway='station')

and not coalesce(s1.disused,'')='yes'

and (s2.highway in ('bus_station','bus_stop') or s2.amenity='bus_station' or

s2.railway in ('station','halt','tram_stop') or s2.aerialway='station')

and not coalesce(s2.disused,'')='yes'

and s1.name = s2.name

and (ST_Distance(s1.way,s2.way) < 250)

group by s1.osm_id, s1.name

order by neighbors, s1.osm_id

),

stop_group(group_id, name, members) as

(

select min(osm_id), name, neighbors from stop_neighbors

group by name, neighbors

union

select sn1.osm_id, sn1.name, array_join(sn1.neighbors, agg_array_join(sn2.neighbors)) as members

from stop_neighbors sn1, stop_neighbors sn2

where sn1.neighbors && sn2.neighbors

and sn1.osm_id <> sn2.osm_id

and not sn2.neighbors <@ sn1.neighbors

group by sn1.osm_id, sn1.name, sn1.neighbors

)

select * from stop_group

where name='Lotto (Fieramilanocity)'

limit 100;

I chose that particular stop because it is quite spread out and thus the outermost members are no longer immediate neighbors. (You can also provoke this effect by picking any large stop group and reducing the distance threshold.)

The resulting table contains the final results, mixed with intermediate ones. All we need is one row per stop, but what we get is at least one row for each record returned by the stop_neighbors query, plus another row per ID for each iteration until the member list is complete. We need to:

- Filter out all rows with partial member lists. These are easy to identify by the existence of at least one row with the same osm_id but more items in members.

- After that, keep only the "first" row (i.e. lowest group_id) for each group. This can be determined in two ways: either select only those rows in which the group_id is the smallest value in members, or normalize (sort) the members column and do a SELECT DISTINCT on the result, along with sorting by group_id.

Given that picking a single member from an array appears to be quite costly (as I learned earlier), I went for the SELECT DISTINCT approach. For this, replace the last select statement of the above query with:

select distinct on (array_sort(members)) * from ( select distinct on (group_id) * from stop_group order by group_id, array_length(members, 1) desc ) as sg1 where name='Lotto (Fieramilanocity)' order by array_sort(members), group_id limit 100;

Et voilà! We get one row with a list of all the stops.

To make this useful for rendering, we have to add the geometry of the stop group; if we want to implement different rendering rules for different types of stops, we also need to provide these.

For the geometry, PostGIS defines MULTI* types, which can hold multiple geometries of the same type (lines, points etc.), as well as GEOMETRYCOLLECTION, which can hold any combination of elements. The ST_Collect function, when run as an aggregate function on a geometry column, will collect all geometries into one of these types (the output type is determined by the set of input geometries).

ST_Collect appears not to eliminate duplicates. This is unlikely to affect our rendering purposes because:

- In order to draw stops as an area, we use the convex hull - for which it does not matter if the same item is there multiple times.

- In order to draw stops as a point, the simplest approach would seem the centroid. However, this can give unexpected results with mixed geometry types (e.g. points and areas) as only the ones with the highest dimension are used to calculate the centroid (areas are 2D, lines are 1D, points have no dimension). Hence, when a stop group consists of four points and one area, the centroid of the area would be the centroid of the stop. However, if we use the centroid of the convex hull, results should be aesthetically pleasing, and as with the area, duplicate geometries won't do any harm.

- For the label it is most convenient to use the centroid as well, so the same considerations as above apply.

Thus we can even modify our outermost query to return the convex hull.