Photogrammetry

See the 3D point cloud in a video

Photogrammetry is a technique used by many mapping agencies and companies to gather data. Given two photos of a scene taken from different positions, it is possible to extract the geometry of the scene. This can be done manually or by software, and features such as buildings, road shapes and amenities can then be added to the map with high relative accuracy.

This is an extreme version of photo mapping, involving more photos (and more complicated software!) to position things very accurately. There are also similar discussions in relation to video mapping.

Taking photos

I went to Market Harborough town centre and took about 65 photos of the church from various viewpoints. It's best to stand well back and get as much of the object in view as possible, with maximal overlap between images, rather than taking lots of close-ups where each photo overlaps only a little with the next, as you might when making a panorama.

I read somewhere along the way that a photo every 15 degrees as you walk around might work well, but I'm still experimenting with this and don't have any golden rules to follow. When you look at the final output of the program, the numbering of the .ply files gives you an idea of which photos were used and which weren't; after a while this may be your best guide.

Don't go overboard on photos, though. From my experience so far, the CPU time in seconds to crunch a set of n photos is roughly: t = 2.5n² + 90n

For 65 photos that equals about 80 minutes. In the end I think only 30-35 of my photos were actually used in the reconstruction, so if I'd been more careful about which photos I used, I could have achieved the same results in under 10 minutes. This software isn't as optimised as Microsoft's Photosynth, so don't dump 500 photos on it and expect it to finish processing in under a day. Five well-placed photos could well be better than 50 rubbish ones.
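To get a feel for how quickly the cost grows, the estimate can be evaluated for a few set sizes. This is only a sketch: it reads the formula as quadratic in the number of photos, t = 2.5·n² + 90·n seconds (the exponent is an assumption about the intended formula), and the function name is made up for illustration. Evaluated literally, the fit gives a larger figure for 65 photos than the timing reported in this section, so treat the coefficients as rough and machine-specific; the useful part is the quadratic growth.

```python
def estimated_cpu_seconds(n_photos):
    """Rough empirical fit, read as t = 2.5*n^2 + 90*n (seconds).

    The coefficients come from one contributor's runs and are an
    approximation, not a property of Bundler itself.
    """
    if n_photos < 0:
        raise ValueError("n_photos must be non-negative")
    return 2.5 * n_photos ** 2 + 90 * n_photos


if __name__ == "__main__":
    # Compare a few set sizes; the n^2 term dominates for large sets.
    for n in (5, 35, 65, 500):
        minutes = estimated_cpu_seconds(n) / 60
        print(f"{n:>4} photos: about {minutes:.0f} minutes")
```

Whatever the exact coefficients are on your machine, doubling the number of photos roughly quadruples the dominant term, which is why pruning redundant photos pays off.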

Installation

- Install Python

- Install the PIL library for Python from http://www.pythonware.com/products/pil/

- Download the osm-bundler distribution http://osm-bundler.googlecode.com/files/osm-bundler-full.zip and unpack it. Alternatively you can compose it yourself from several pieces. See http://code.google.com/p/osm-bundler/wiki/CreateDistributionYourself for the details.

Linux users may need to take two additional steps:

- Install libgfortran.so.3:

sudo apt-get install libgfortran3

- Specify where the libANN_char.so library is by typing:

export LD_LIBRARY_PATH="/pathto/osm-bundler/software/bundler/bin"

There are a couple of discussions on forums about how this shouldn't be necessary, and various solutions were suggested, but none of them worked for me, so I just accept that I have to do this each time I want to run Bundler. If anyone knows how to fix this, please let me know.
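One way to avoid retyping the export is a small wrapper script that sets LD_LIBRARY_PATH only for the Bundler process. The following is a minimal sketch, not part of osm-bundler: the directory constants and function names are hypothetical, it assumes RunBundler.py sits in the unpacked osm-bundler directory, and it assumes the Python interpreter running the wrapper is one that osm-bundler works with. It uses only the --photo option described on this page.

```python
import os
import subprocess
import sys

# Hypothetical locations: adjust to wherever osm-bundler was unpacked.
OSM_BUNDLER_DIR = "/pathto/osm-bundler"
BUNDLER_BIN = os.path.join(OSM_BUNDLER_DIR, "software", "bundler", "bin")
RUN_SCRIPT = os.path.join(OSM_BUNDLER_DIR, "RunBundler.py")


def bundler_env(bin_dir=BUNDLER_BIN, base_env=None):
    """Return a copy of the environment with bin_dir prepended to LD_LIBRARY_PATH."""
    env = dict(os.environ if base_env is None else base_env)
    existing = env.get("LD_LIBRARY_PATH", "")
    env["LD_LIBRARY_PATH"] = bin_dir + (os.pathsep + existing if existing else "")
    return env


def run_bundler(photos, run_script=RUN_SCRIPT):
    """Run RunBundler.py on a photo directory or a text file listing photos."""
    cmd = [sys.executable, run_script, "--photo=" + photos]
    # check=True raises CalledProcessError if RunBundler.py exits with an error.
    return subprocess.run(cmd, env=bundler_env(), check=True)


# Example call (not executed here):
#     run_bundler("/home/me/photos/church")
```

Because the variable is set only in the child process's environment, your shell configuration stays untouched.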

Running osm-bundler

Normally you would run osm-bundler in the following way:

python path_to/RunBundler.py --photo=<text file with a list of photos or a directory with photos>

You may try a test set composed of 5 photos: http://osm-bundler.googlecode.com/files/example_OldTownHall.zip

To see help just run:

python path_to/RunBundler.py

It may take a while to process all input photos and reconstruct the sparse 3D geometry.

At the end, on Windows an Explorer window opens showing the folder that contains all the processing files; on Linux the path to that folder is printed instead. The folder should contain a subfolder called bundle with the output .ply files. These are built sequentially as each photo is examined, so the highest-numbered file contains all the data points and there is no need to combine files.
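Picking the highest-numbered file by eye is easy to get wrong when names sort alphabetically (10 before 9). A small helper can do it numerically. This is a sketch under the assumption that each output file name contains its sequence number as digits; the function names are invented for illustration and no particular file naming scheme is assumed beyond that.

```python
import re
from pathlib import Path


def file_index(path):
    """Return the last run of digits in the file name (without extension), or None."""
    matches = re.findall(r"\d+", path.stem)
    return int(matches[-1]) if matches else None


def latest_ply(bundle_dir):
    """Return the .ply file with the highest number in its name, or None if there is none."""
    numbered = [
        (file_index(p), p)
        for p in Path(bundle_dir).glob("*.ply")
        if file_index(p) is not None
    ]
    # Compare by the numeric index, not by the file name string.
    return max(numbered, key=lambda pair: pair[0])[1] if numbered else None
```

Calling latest_ply on the bundle subfolder returns the path to open in Blender or SketchUp; files without any digits in their name are ignored.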

Tracing 3D geometry outlines for OpenStreetMap

Many programs can open .ply files but the easiest we found to use was Blender. Once you've opened the program, click File>Import>Stanford PLY and select your file.

Assuming things ran as they should, you should see a point cloud that resembles the objects in the photos.

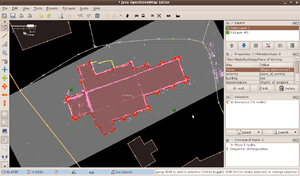
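Before opening a file in Blender, it can be useful to check how many points the reconstruction actually produced. The PLY format starts with a text header that declares the number of vertices, so this can be read without any 3D software. The sketch below assumes a standard PLY header (a first line reading ply, an element vertex line, and a closing end_header line); the function name is made up for illustration.

```python
def ply_vertex_count(path):
    """Read a PLY header and return the declared number of vertices."""
    with open(path, "rb") as f:
        if f.readline().strip() != b"ply":
            raise ValueError("not a PLY file: missing 'ply' magic line")
        for raw in f:
            parts = raw.decode("ascii", errors="replace").split()
            if len(parts) == 3 and parts[:2] == ["element", "vertex"]:
                return int(parts[2])
            if parts == ["end_header"]:
                # Stop before the point data, which may be binary.
                break
    raise ValueError("no 'element vertex' line found in the PLY header")
```

A file with only a handful of vertices usually means few photos were matched, which is worth knowing before spending time on tracing.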

When you're ready to take a screenshot of Blender, open the View menu and ensure the view is set to orthographic, not perspective. Now open JOSM, install the PicLayer plugin and import your screenshot. Assuming you got a good-quality bundle, you should be able to do some good tracing.

Output visualization in Google Sketchup

First install a plugin for Google Sketchup:

- Install the latest version of Google Sketchup

- Download file ply-importer.rb from here

- Put it into Google SketchUp 7\Plugins directory

Now open the .ply file with the largest index:

- Start Google Sketchup

- File->Import

- Select PLY Importer(*.ply) and choose a .ply file with the largest index

Note that Google Sketchup does not have a point as a 3D primitive, so "construction points" are used to represent points instead. Construction points play the same role in Google Sketchup as any point in JOSM: a line can be attached to any construction point during design work.

Orientation, scale and absolute position

Note that while this software recovers 3D data from photos, only the relative positions of the points are recovered. Orientation, scale and absolute position are still unknown, so you will need a GPX file and perhaps some reference dimensions to trace correctly.
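For a top-down outline, the missing orientation, scale and position amount to a 2D similarity transform, which two reference points are enough to pin down. The sketch below is one way to compute it, not something osm-bundler provides: it assumes both the traced outline and the reference points (for example from a GPS trace) are in planar coordinates such as metres in a local projection, that the outline is not mirrored, and the function names are invented for illustration. Points are treated as complex numbers so that scale and rotation combine into a single factor a.

```python
import cmath
import math


def fit_similarity(p1, p2, q1, q2):
    """Fit z -> a*z + b mapping traced points p1, p2 onto reference points q1, q2.

    All points are (x, y) tuples in planar coordinates.
    abs(a) is the scale and the phase of a is the rotation.
    """
    zp1, zp2, zq1, zq2 = (complex(*pt) for pt in (p1, p2, q1, q2))
    if zp1 == zp2:
        raise ValueError("the two traced reference points must differ")
    a = (zq2 - zq1) / (zp2 - zp1)
    b = zq1 - a * zp1
    return a, b


def apply_similarity(a, b, point):
    """Transform one traced (x, y) point into reference coordinates."""
    z = a * complex(*point) + b
    return (z.real, z.imag)


# Made-up example: a 10-unit traced edge corresponds to a 20 m edge on the ground.
a, b = fit_similarity((0, 0), (10, 0), (100, 200), (100, 220))
scale = abs(a)                                # ground units per traced unit
rotation_deg = math.degrees(cmath.phase(a))   # counter-clockwise rotation
```

Two points give an exact fit, so any error in either reference point goes straight into the result; with more reference points a least-squares fit would be more robust.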

Legality

Bundler is licensed under the GPL, and assuming you took the photos yourself or have permission, I can't see any reason this can't be used in OSM. osm-bundler uses an open-source implementation of the patented SIFT feature extraction algorithm from the VLFeat library. It's also likely that other software such as ExtractSURF can do what we want, which might be an avenue worth investigating. It's probably best if people don't use David Lowe's SIFT to contribute data to OSM.

Example

This example was posted by Ainsworth in his OSM diary entry about Photogrammetry.

The software outputs .ply files which can be opened with Blender to get an idea of how successful the bundle adjustment was. This video shows the point cloud that was created using my photos.

I cheated a little to get the data into a usable format for JOSM by taking a screen dump of Blender and using PicLayer to give me a guide. I then traced the building shape and positioned it using a GPS trace from a previous visit.

See also

- Phototexturing

- Open3DMap, an upcoming 3D model repository for linking 3D objects to OSM objects

- Insight3D is another program for making point clouds using the SIFT algorithm and triangulation. It has a GUI, lets you define your own points in addition to the automatic SIFT points, and can reconstruct 3D models with photo textures. The downloadable version runs on Windows, but the Linux version doesn't compile easily without applying some patches. A pre-patched version is being maintained in a git repository here.

- David3D, for very small objects, using a webcam and a laser pointer

- Stereo (very outdated)

- OpenSourcePhotogrammetry

- VideoTrace, unfortunately commercial, works from video sequences

- Pixelstruct

- MS Photosynth

- Multi-View Stereo for Community Photo Collections, creating models out of Flickr photos

- Blender3D with the BLAM extension allows triangulation and photo matching with the power of a full 3D suite

- 123D, an Autodesk app and service

- Agisoft Photoscan